If you are preparing for a DevOps interview, or for a Terraform administrator or developer role, consider this Pass Terraform Certification with top Terraform Interview Questions and Answers tutorial your lucky friend: it will help you pass the certification exam or the Terraform interview.

Without further delay, let's get into this ultimate Pass Terraform Certification with top Terraform Interview Questions and Answers guide.

PAPER-1

Q1. What is IaC?

Answer: IaC stands for Infrastructure as Code: infrastructure is defined in code that you can write, review, test, and run, then update and redeploy whenever needed. Because the infrastructure is described in code, you can create and destroy it quickly, efficiently, and repeatably.

Q2. Are there any benefits of Infrastructure as Code?

Answer: Yes, many. IaC lets you automate infrastructure work: with one set of scripts using the same syntax throughout, you can update, scale up or down, and destroy resources quickly. IaC code can also be reused and versioned in version control. Terraform, by HashiCorp, is one such Infrastructure as Code tool.

Q3. What are the use cases of Terraform?

Answer: Terraform has many use cases, such as:

- Heroku app setup (PaaS-based applications)

- Multi-tier apps (for example: web app + DB + API + caching)

- Disposable environments, such as dev and stage, for testing.

- Multi-cloud deployments.

- Resource schedulers such as Kubernetes or Borg, which schedule containers, Spark jobs, etc.

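Q4. What is the Terraform state file?

Answer: The Terraform state file (terraform.tfstate by default) records the infrastructure Terraform manages and maps the resources in your configuration to the real objects they represent. It is stored as JSON. Before the first apply it contains no resources; after terraform apply, the IDs and other attributes of every deployed resource are recorded in it. terraform plan compares your configuration against this state (refreshed against the real infrastructure) to decide what must be created, changed, or destroyed.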

Q5. What are the different formats of Terraform configuration files?

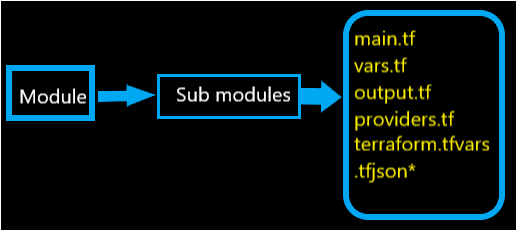

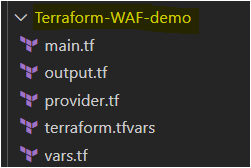

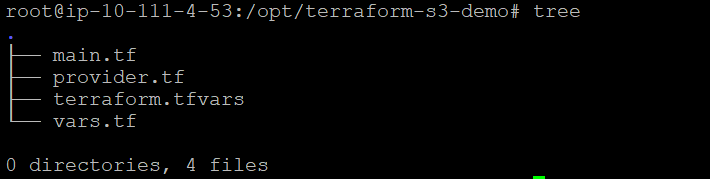

Answer: Terraform configuration files use either the native .tf format (HCL syntax) or the JSON-based .tf.json format. Common file names include main.tf, vars.tf, output.tf, and provider.tf; variable values are usually supplied in a terraform.tfvars file.

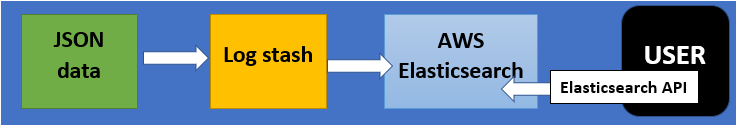

Q6. What are Terraform providers?

Answer: Providers are one of the most important parts of Terraform: they are plugins that let Terraform connect to remote systems through their APIs. Examples include the Google, AWS, and Azure providers, as well as providers for Oracle, MySQL, PostgreSQL, etc.
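For illustration, here is a minimal sketch of a provider configuration, assuming the AWS provider; the region and bucket name are hypothetical example values. Resource types prefixed with aws_ are then managed through this provider.

```hcl
# Configure the AWS provider (region chosen only as an example)
provider "aws" {
  region = "us-east-1"
}

# Resource types prefixed with "aws_" are managed through the provider above.
# The bucket name is hypothetical and must be globally unique in practice.
resource "aws_s3_bucket" "example" {
  bucket = "my-example-bucket-name"
}
```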

Q7. Name three provisioners used in Terraform.

Answer: local-exec, remote-exec, and file.
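A sketch of all three provisioners on one resource, assuming the AWS provider; the AMI ID, key file, user name, and app.conf file are hypothetical placeholders. The connection block tells file and remote-exec how to reach the instance; local-exec ignores it.

```hcl
resource "aws_instance" "web" {
  ami           = "ami-12345678" # hypothetical AMI ID
  instance_type = "t3.micro"

  # How file and remote-exec reach the instance (key file is hypothetical)
  connection {
    type        = "ssh"
    user        = "ec2-user"
    private_key = file("deployer-key.pem")
    host        = self.public_ip
  }

  # file: copy a local file to the new instance
  provisioner "file" {
    source      = "app.conf"
    destination = "/tmp/app.conf"
  }

  # remote-exec: run commands on the instance over the connection above
  provisioner "remote-exec" {
    inline = ["cat /tmp/app.conf"]
  }

  # local-exec: run a command on the machine where Terraform is running
  provisioner "local-exec" {
    command = "echo ${self.public_ip} >> ips.txt"
  }
}
```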

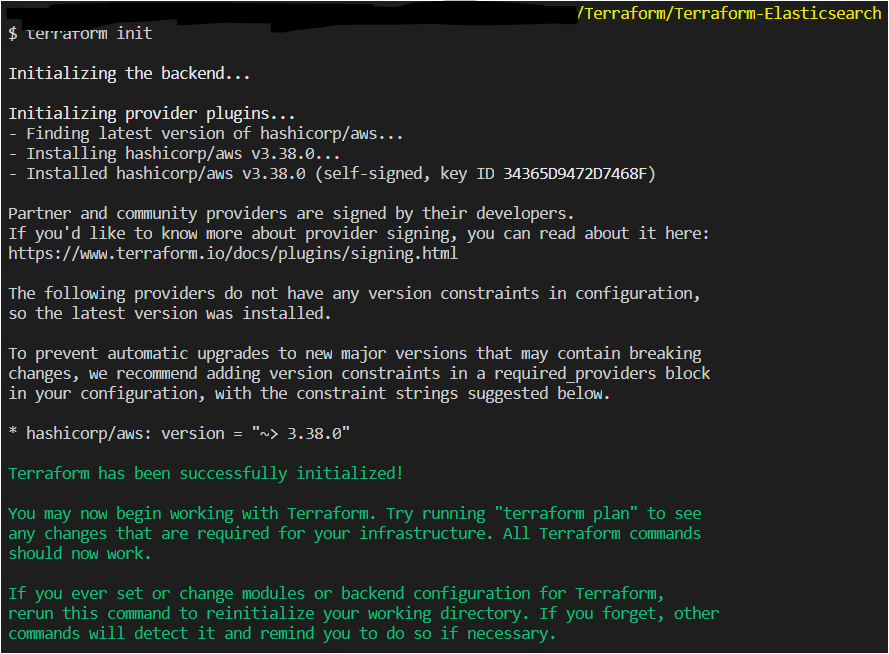

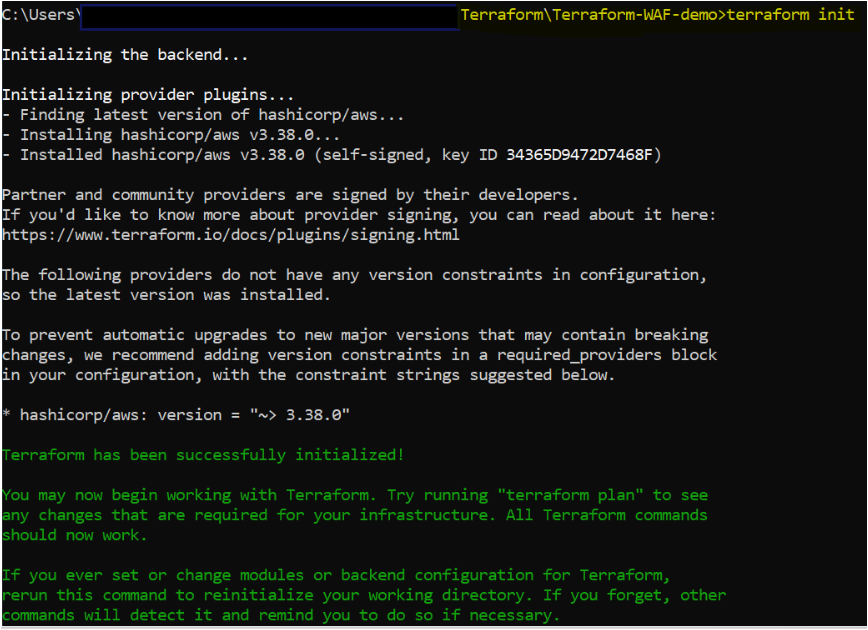

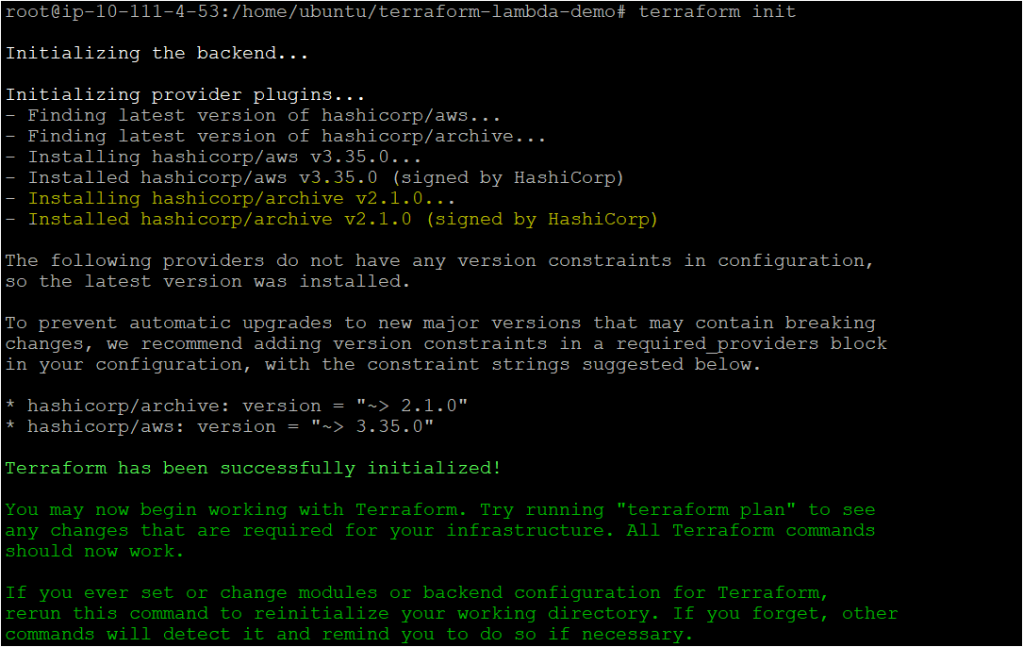

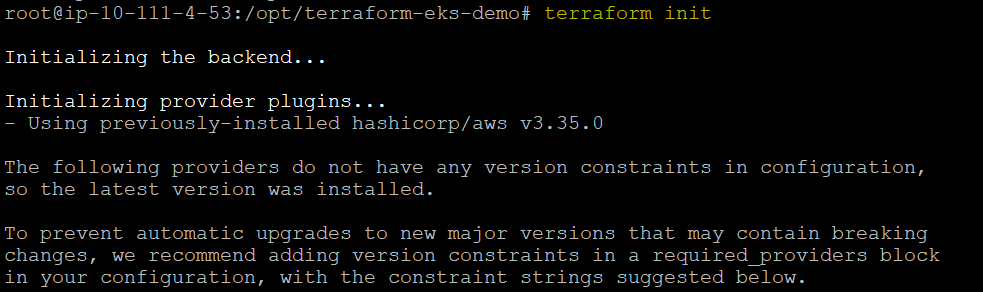

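Q8. What happens when you run terraform init?

Answer: terraform init initializes the working directory: it configures the backend, downloads the modules referenced in the configuration, and installs the required providers. For each provider it uses the version recorded in the dependency lock file (.terraform.lock.hcl) if one exists; otherwise it installs the newest version that satisfies the version constraints and records it in the lock file.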

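Q9. How do you define a Terraform provider version?

Answer: Declare it in the required_providers block inside the terraform block. Since Terraform 0.13, each entry takes a source address and a version constraint:

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 1.0"
        }
      }
    }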

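Q10. How do you update the Terraform provider version?

Answer: Run terraform init with the -upgrade flag. It installs the newest provider and module versions allowed by your version constraints and updates the dependency lock file:

terraform init -upgrade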

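Q11. Other than in the terraform block, where else can you define a provider version?

Answer: In the provider block itself, using the version argument. This form has been deprecated since Terraform 0.13 in favour of required_providers:

    provider "aws" {
      version = "1.0"
    }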

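Q12. If you need two configurations of the same Terraform provider, for example to deploy resources in different regions, what do you do?

Answer: Use the alias meta-argument to define an additional provider configuration, then select it in a resource with provider = <provider name>.<alias>.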

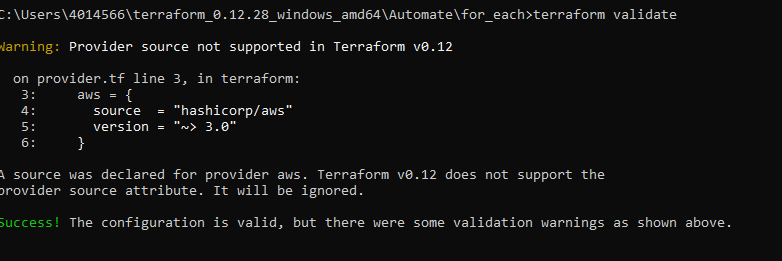
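A minimal sketch of the alias approach, assuming the AWS provider; the regions and AMI IDs are hypothetical examples (AMI IDs differ per region, hence two values):

```hcl
# Default configuration of the AWS provider
provider "aws" {
  region = "us-east-1"
}

# Second configuration of the same provider, told apart by its alias
provider "aws" {
  alias  = "west"
  region = "us-west-2"
}

# Uses the default (un-aliased) configuration
resource "aws_instance" "east" {
  ami           = "ami-11111111" # hypothetical
  instance_type = "t3.micro"
}

# Selects the aliased configuration with provider = <name>.<alias>
resource "aws_instance" "west" {
  provider      = aws.west
  ami           = "ami-22222222" # hypothetical
  instance_type = "t3.micro"
}
```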

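Q13. How do you format and validate Terraform configuration files?

Answer: Use terraform fmt to rewrite the files in the canonical format and style, and terraform validate to check that the configuration is syntactically valid and internally consistent.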

Q14. What command checks the current status of the applied infrastructure, and how can you list the resources in your state file?

Answer: Use terraform show to display the current state, and terraform state list to list the resources in the state file.

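Q15. What is the difference between the local-exec and remote-exec provisioners?

Answer: local-exec runs a command on the machine where Terraform is running, for example to print output to your terminal; remote-exec runs commands on the remote resource itself, such as an EC2 instance. Both run during terraform apply (when the resource is created, or when it is destroyed for destroy-time provisioners), not during terraform plan.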

Q16. What are the two connection types used with the remote-exec provisioner?

Answer: SSH or WinRM.
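A sketch of a WinRM connection for remote-exec on a Windows instance, assuming the AWS provider; the AMI ID, the admin_password variable, and the command are hypothetical:

```hcl
variable "admin_password" {
  type      = string
  sensitive = true # keep the value out of plan output
}

resource "aws_instance" "windows" {
  ami           = "ami-33333333" # hypothetical Windows AMI
  instance_type = "t3.medium"

  # WinRM instead of SSH for Windows hosts
  connection {
    type     = "winrm"
    user     = "Administrator"
    password = var.admin_password
    host     = self.public_ip
  }

  provisioner "remote-exec" {
    inline = ["powershell.exe -Command Get-Date"]
  }
}
```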

Q17. When does Terraform mark a resource as tainted?

Answer: When the resource is created successfully but a creation-time provisioner fails. Terraform records this by marking the object as "tainted" in the state, and the next plan you create proposes to replace it.

Q18. What happens to a tainted resource the next time you run terraform plan?

Answer: Terraform no longer trusts the tainted object, so the plan proposes to destroy it and create a new one in its place (a replacement).

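Q19. How do you manually taint a resource, and does tainting modify your infrastructure?

Answer: Use terraform taint followed by the resource address, for example terraform taint aws_instance.web. No, tainting does not modify your infrastructure: only the state file is changed, and the resource is replaced on the next apply. From Terraform v0.15.2, terraform taint is deprecated and terraform apply -replace="aws_instance.web" is recommended instead.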

Q20. How do you bypass a provisioner failure during terraform apply?

Answer: Set on_failure = continue in the provisioner block. Don't use it if you think the failure could cause issues, such as leaving the resource in a broken state.

PAPER-2

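Q1. What does version = "~> 1.0" mean?

Answer: It means any version greater than or equal to 1.0 but less than 2.0. The ~> (pessimistic constraint) operator allows only the rightmost specified version component to increase.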

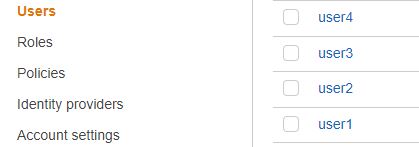
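As a sketch, the comments below compare a few constraint forms, assuming the AWS provider from the hashicorp namespace:

```hcl
terraform {
  required_providers {
    aws = {
      source = "hashicorp/aws"

      # "~> 1.0"        means >= 1.0 and < 2.0 (only the last given part may grow)
      # "~> 1.2.0"      would mean >= 1.2.0 and < 1.3.0 (patch releases only)
      # ">= 1.0, < 2.0" is an explicit way to write the same range as "~> 1.0"
      version = "~> 1.0"
    }
  }
}
```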

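Q2. What is the more secure practice in Terraform: hard-coded credentials or an instance profile?

Answer: An instance profile. The credentials never appear in your code, and the temporary credentials it provides are rotated automatically.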
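A sketch of the difference, assuming the AWS provider running on an EC2 instance that has an instance profile attached; the commented-out key values are fake placeholders:

```hcl
# Avoid: keys written into the configuration end up in version control
# provider "aws" {
#   region     = "us-east-1"
#   access_key = "FAKE-HARDCODED-KEY"
#   secret_key = "fake-hardcoded-secret"
# }

# Prefer: no credentials in code. On an EC2 instance with an instance
# profile attached, the provider's default credential chain picks up
# the role's temporary credentials automatically.
provider "aws" {
  region = "us-east-1"
}
```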

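Q3. How can you deal with a resource that failed during terraform apply without affecting the entire infrastructure?

Answer: Run terraform taint <resource address> so that only that resource is destroyed and recreated on the next terraform apply. In Terraform v0.15.2 and later, terraform apply -replace=<resource address> does the same in one step.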

Q4. What is a Terraform workspace, and what is the default workspace name?

Answer: A workspace is a separate, named instance of state within one backend. By default a configuration has a single state file, belonging to a workspace named default. If you want several state files (for example, one per environment) associated with the same configuration and backend, you create additional workspaces.

Q5. What are the commands to list Terraform workspaces and to create a new one?

Answer: terraform workspace list lists the workspaces, and terraform workspace new *new_workspace* creates a new one.

Q6. Can you delete the default Terraform workspace?

Answer: No, you cannot delete default Terraform workspace.

Q7. Using count, how can you create one instance in the default Terraform workspace and five instances in every other workspace?

Answer: Use a conditional expression on terraform.workspace, which holds the name of the current workspace, in the count argument:

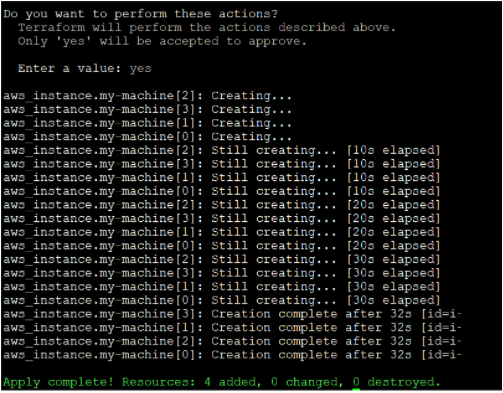

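    resource "aws_instance" "mymachine" {
      # 1 instance in the default workspace, 5 in any other workspace
      count = terraform.workspace == "default" ? 1 : 5

      # ami, instance_type, and other required arguments omitted
    }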

Q8. How can you check the attributes of a single resource in the state file?

Answer: terraform state show <resource address>, for example terraform state show aws_instance.web.

Q9. How can you bring the state file to your local machine, and upload it to a remote location?

Answer: terraform state pull downloads the current state from the remote backend and prints it, and terraform state push manually uploads a local state file to the remote backend, such as an S3 bucket in AWS.

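Q10. How do you remove items from the Terraform state file?

Answer: Use terraform state rm with the resource address. The real object is not destroyed; Terraform simply stops managing it:

terraform state rm 'packet_device.worker'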
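In Terraform 1.7 and later, the same result can also be expressed declaratively with a removed block. A sketch, assuming that version and that the resource "packet_device" "worker" block is deleted from the configuration at the same time:

```hcl
# Forget packet_device.worker on the next apply without destroying the real device
removed {
  from = packet_device.worker

  lifecycle {
    destroy = false # keep the real object; only drop it from state
  }
}
```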

Q11. How do you move items within the Terraform state file?

Answer: Run terraform state mv with the source and destination addresses, for example:

terraform state mv 'module.app' 'module.parent.module.app'
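In Terraform 1.1 and later, a moved block can record the same refactoring in configuration instead of running the command by hand. A sketch, assuming module.parent itself contains a module "app" block:

```hcl
# Tell Terraform that module.app now lives inside module.parent, so the next
# plan/apply updates the state addresses instead of destroying and recreating.
moved {
  from = module.app
  to   = module.parent.module.app
}
```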

Q12. Where can Terraform modules be located?

Answer: Terraform modules can be stored on the local filesystem, in Git repositories, in AWS S3 buckets, in the Terraform Registry, and in other supported sources.
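A sketch of how the source argument points at each kind of location; apart from the public hashicorp/consul/aws registry module, the paths, URLs, and module names are hypothetical:

```hcl
# Local filesystem path
module "network_local" {
  source = "./modules/network"
}

# Terraform Registry (<namespace>/<name>/<provider>)
module "consul" {
  source = "hashicorp/consul/aws"
}

# Git repository, pinned to a tag with ?ref=
module "network_git" {
  source = "git::https://example.com/network.git?ref=v1.2.0"
}

# Archive in an S3 bucket
module "network_s3" {
  source = "s3::https://s3-eu-west-1.amazonaws.com/examplecorp-terraform-modules/network.zip"
}
```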

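Q13. Where are Terraform providers located?

Answer: Most providers are published in the Terraform Registry, from which terraform init downloads them. They can also be installed from other registries or from local mirrors.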

Q14. What command checks the current status of the applied infrastructure, and how can you list the resources in your state file?

Answer: Use terraform show to display the current state, and terraform state list to list the resources in the state file.

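Q15. How do you download a Terraform module for use in your configuration?

Answer: Add a module block containing source and, for registry modules, version. terraform init then downloads the module into the .terraform/modules directory.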
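A minimal sketch, assuming the public terraform-aws-modules/vpc/aws registry module; the version constraint and input values are only examples:

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0" # version is only supported for registry sources

  # Input variables defined by the module (example values)
  name = "example-vpc"
  cidr = "10.0.0.0/16"
}
```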

Q16. What are Terraform modules?

Answer: A Terraform module is a set of Terraform configuration files in a single directory. Modules package configuration so that you or others can reuse it for simplicity and ease.

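Q17. What is "${}" known as?

Answer: "${}" is interpolation syntax. It was required in Terraform 0.11 and earlier; since 0.12, expressions can be written directly, but "${}" is still supported and is still how you embed an expression inside a string.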
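A short sketch contrasting the two styles; the env variable and bucket name are hypothetical:

```hcl
variable "env" {
  type    = string
  default = "dev" # hypothetical value
}

locals {
  # Terraform 0.11 and earlier required the "${}" wrapper:
  #   env_name = "${var.env}"
  # Terraform 0.12 and later accept the bare expression:
  env_name = var.env

  # "${}" is still how you embed an expression inside a longer string
  bucket_name = "my-app-${var.env}-logs"
}
```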

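Q18. What is the default data type in Terraform?

Answer: String in Terraform 0.11 and earlier, where a variable without a type was treated as a string. Since Terraform 0.12, a variable with no type constraint accepts a value of any type (equivalent to type = any).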
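A sketch of both cases, assuming Terraform 0.12 or later; the variable names are hypothetical:

```hcl
# No type constraint: accepts a value of any type
variable "settings" {
  default = {}
}

# Explicit type constraints
variable "region" {
  type    = string
  default = "us-east-1"
}

variable "instance_count" {
  type    = number
  default = 2
}
```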

Q19. What does the .terraform directory contain?

Answer: The .terraform directory, created by terraform init, holds the downloaded provider plugins and modules, plus details of the initialized backend and the currently selected workspace.

Q20. What are the core Terraform commands?

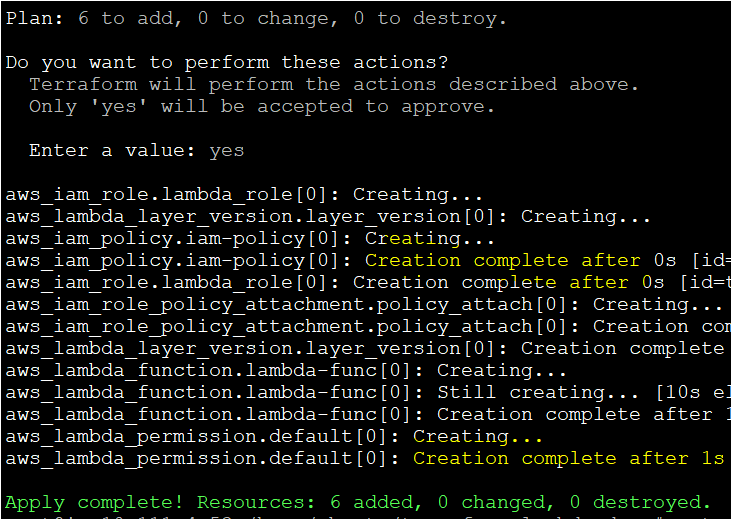

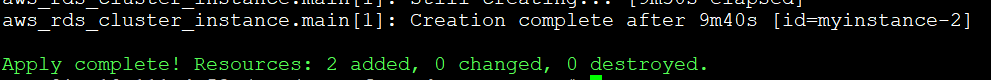

Answer: terraform init ➔ terraform plan ➔ terraform apply

PAPER-3

Q1. How do you stop a failing provisioner from failing terraform apply?

Answer: Set the on_failure argument in the provisioner block, as shown below.

    resource "aws_instance" "web" {
      # ami, instance_type, and other required arguments omitted

      provisioner "local-exec" {
        command = "echo The server's IP address is ${self.private_ip}"

        # continue: ignore the error and carry on with creation or destruction
        # fail (the default): raise an error and stop the apply
        on_failure = continue
      }
    }

Q2. Is it possible to skip the Terraform backend? If yes, how?

Answer: Yes. Run terraform init with -backend=false, which skips backend initialization and reuses whatever backend was previously initialized, if any:

terraform init -backend=false

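Q3. How can you skip plugin installation while initializing Terraform?

Answer: On older Terraform releases, by running the following command:

terraform init -get-plugins=false

This flag is not supported in current Terraform versions. There, terraform init -plugin-dir=PATH stops Terraform from downloading providers and installs them only from the given directory.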

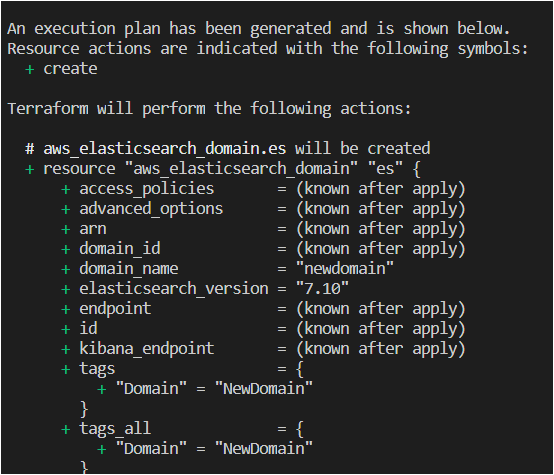

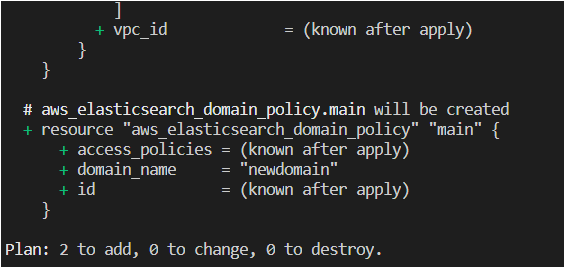

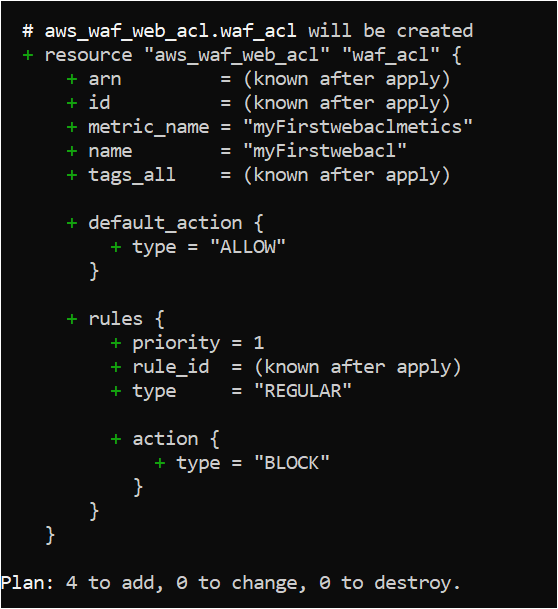

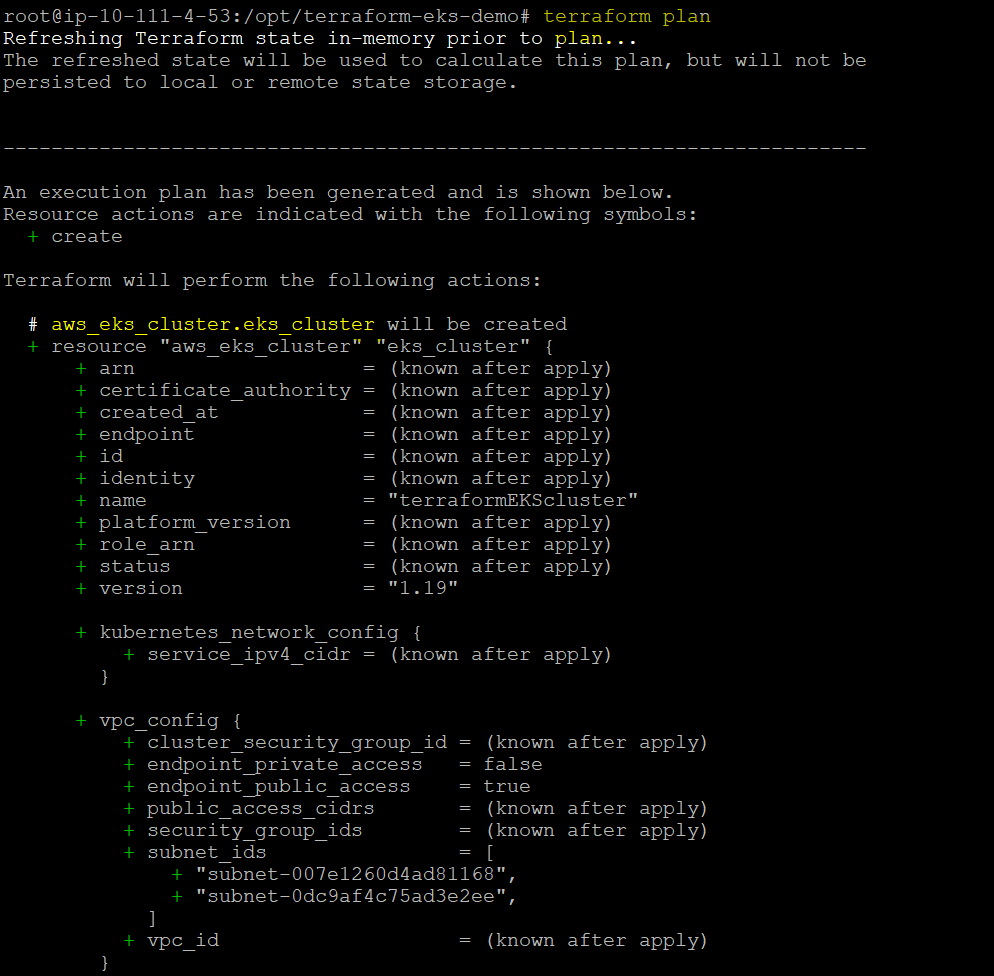

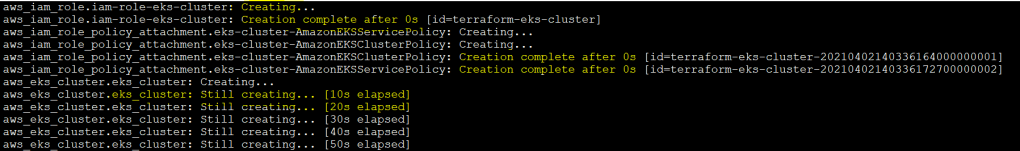

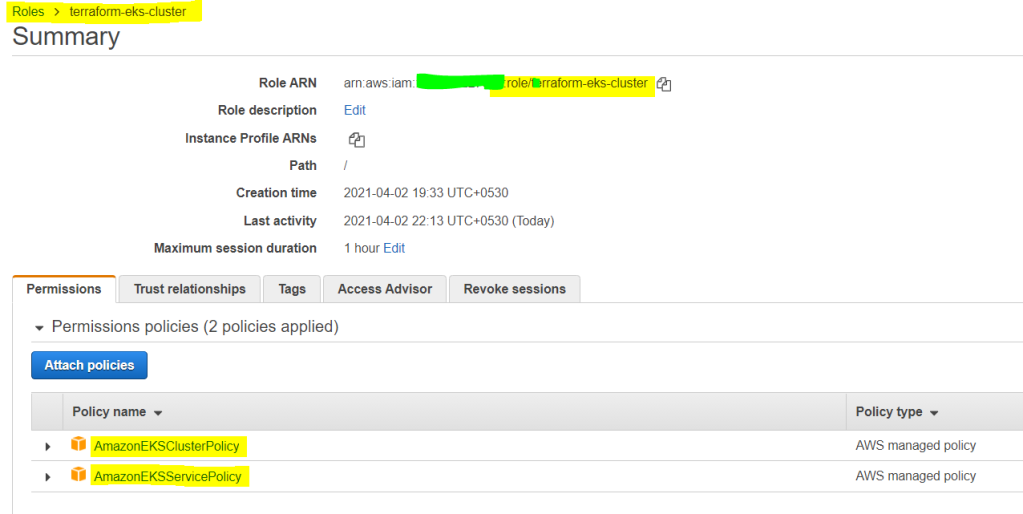

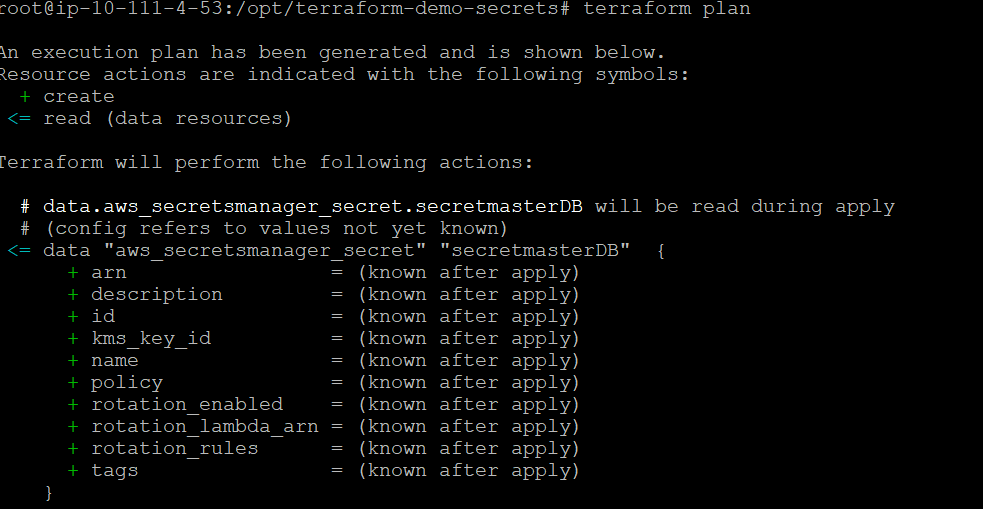

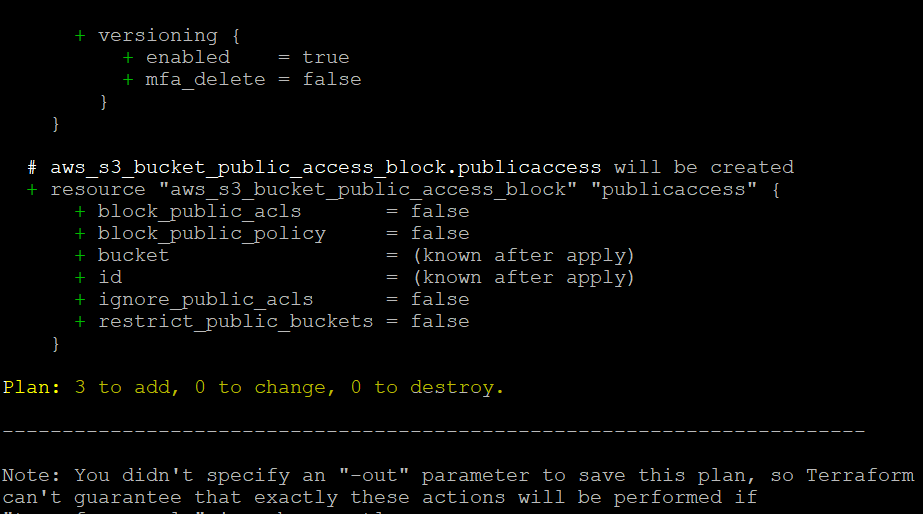

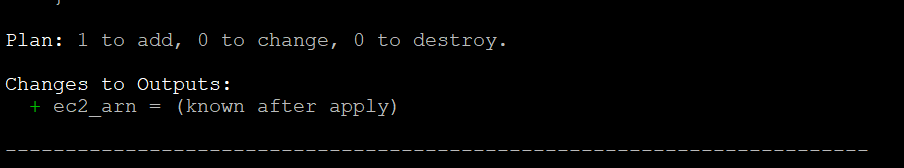

Q4. What is the use of the terraform plan command?

Answer: terraform plan creates an execution plan: it compares your configuration with the current state and determines which actions are necessary to reach the desired state, without changing anything.

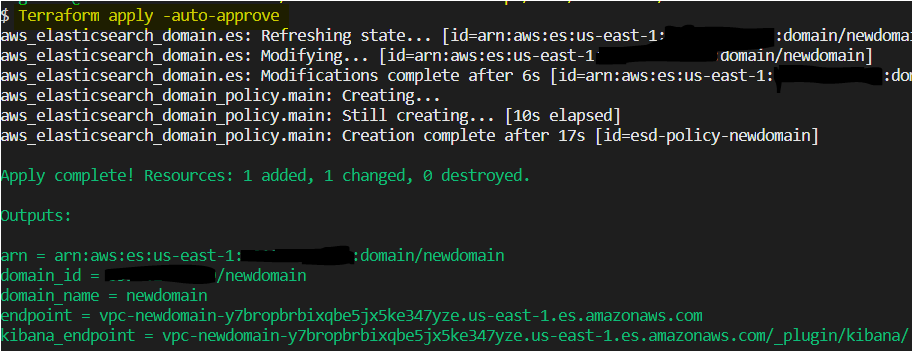

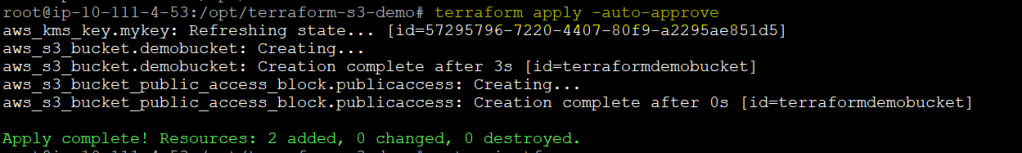

Q5. How can you let Terraform deploy the infrastructure without asking for interactive approval?

Answer: Use the -auto-approve flag:

terraform apply -auto-approve

Q6. How can you preview the behavior of the terraform destroy command?

Answer: Run the following command, which shows which resources would be destroyed without destroying anything:

terraform plan -destroy

Q7. How can you save the execution plan?

Answer: Use the -out option. You can later apply exactly that saved plan with terraform apply tf-plan.

terraform plan -out=tf-plan

Q8. How can you see the attributes of a single resource in the state file?

Answer: By using the following command with the resource address:

terraform state show 'aws_instance.web'

Q9. How can you get a detailed exit code when running terraform plan?

Answer: Add -detailed-exitcode to the plan command. It returns 0 when there are no changes, 1 on error, and 2 when the plan contains changes.

terraform plan -detailed-exitcode

Q10. If you manually delete an EC2 instance that Terraform created, using the AWS console, what happens the next time you run terraform apply? Does Terraform recreate it?

Answer: Yes. During refresh, Terraform finds that the instance no longer exists and drops it from the state; because the instance is still declared in the configuration, the plan proposes to create it again.

Q11. What are Terraform backends?

Answer: A backend determines where Terraform state is stored and loaded from. By default state is stored on the local machine, but you can also configure a remote backend such as an AWS S3 bucket.
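A minimal sketch of an S3 backend, assuming the bucket already exists; the bucket name, key, and region are hypothetical. Run terraform init after adding or changing it.

```hcl
terraform {
  backend "s3" {
    bucket = "my-terraform-state"     # hypothetical, pre-existing bucket
    key    = "prod/terraform.tfstate" # path of the state object in the bucket
    region = "us-east-1"
  }
}
```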

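Q12. What do you mean by state lock?

Answer: If the backend supports it, Terraform locks the state for every operation that could write to it, such as plan or apply. This stops two runs from writing the state at the same time and helps prevent corruption of your state file.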

Q13. Can you switch from a remote backend back to the local backend? If yes, what needs to be done next?

Answer: Yes. Remove the remote backend block (or change it to the local backend) in the Terraform configuration, then run terraform init (terraform init -migrate-state in recent versions) so that Terraform copies the existing state into the local backend.

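Q14. What command syncs (reconciles) your Terraform state file after Terraform-created resources were modified manually?

Answer: terraform refresh. Since Terraform v0.15.4 it is deprecated in favour of terraform apply -refresh-only, which shows the detected changes and asks for approval before updating the state.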

Q15. Can you use the output of one Terraform module in another module? If yes, how?

Answer: Yes. Declare an output block in the first module, then reference it as module.<module name>.<output name>, for example as an input argument in the second module's block.
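A sketch of passing one module's output into another; the module paths, the vpc_id output, and the matching vpc_id input variable in ./modules/app are hypothetical:

```hcl
# Assumed to exist in ./modules/network/outputs.tf:
#   output "vpc_id" {
#     value = aws_vpc.main.id
#   }

module "network" {
  source = "./modules/network"
}

module "app" {
  source = "./modules/app"

  # Pass the first module's output into an input variable of the second
  vpc_id = module.network.vpc_id
}
```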

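Q16. What is the correct way to assign an argument: a = "${a}" or "${}" = a?

Answer: a = "${a}" is correct: the argument name goes on the left of the equals sign and the expression on the right, so "${}" = a is invalid. In Terraform 0.12 and later the interpolation wrapper is rarely needed, and you can usually write the expression directly (for example, a = var.a instead of a = "${var.a}").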

Q17. Name some important data types in Terraform.

Answer: string, number, bool, list, set, map, tuple, and object.

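Q18. Which built-in function converts a string to a number?

Answer: parseint, which parses a string as an integer in the given base, for example parseint("100", 10). For plain decimal strings, tonumber("100") also works.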
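A few examples in a locals block; the local names are hypothetical, and the comments show the values these expressions evaluate to:

```hcl
locals {
  decimal = parseint("100", 10) # 100
  hex     = parseint("FF", 16)  # 255
  simple  = tonumber("100")     # 100, for plain base-10 strings
}
```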

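Q19. Which built-in function evaluates an expression and returns a Boolean result?

Answer: can, which evaluates the given expression and returns true if it succeeds without errors and false otherwise.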
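A common use is input validation. This sketch, with a hypothetical image_id variable, rejects values that do not start with "ami-":

```hcl
variable "image_id" {
  type = string

  validation {
    # regex() raises an error when there is no match; can() turns that into false
    condition     = can(regex("^ami-", var.image_id))
    error_message = "The image_id value must start with \"ami-\"."
  }
}
```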

Q20. Which built-in function encodes a value as a string using JSON syntax?

Answer: jsonencode, for example jsonencode({"hello" = "America"}), which returns {"hello":"America"}.
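A sketch using jsonencode in a locals block and, more typically, to build an IAM policy document, assuming the AWS provider; the local name, policy name, and statement are hypothetical:

```hcl
locals {
  # Evaluates to the string {"hello":"America"}
  greeting = jsonencode({ "hello" = "America" })
}

resource "aws_iam_policy" "read_objects" {
  name = "example-read-objects" # hypothetical policy name

  # jsonencode turns the HCL object into the JSON policy string AWS expects
  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [
      {
        Effect   = "Allow"
        Action   = ["s3:GetObject"]
        Resource = "*"
      },
    ]
  })
}
```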

Conclusion

In this ultimate guide (Pass Terraform Certification with top Terraform Interview Questions and Answers), you had a chance to revise everything you need to pass the certification and crack the Terraform interview.

You should now have sound knowledge of Terraform and be ready for your upcoming interview.