Amazon Rekognition is a cloud-based image and video analysis service that lets you add computer vision capabilities to your applications. It can analyze images and videos stored in an Amazon S3 bucket.

The service is built on highly scalable deep learning technology developed by Amazon’s computer vision scientists.

Using Rekognition’s APIs, you can add features to your application that detect objects, text, and unsafe content, analyze images and videos, and compare faces.

A label refers to any of the following: objects (a tree or a table), events (a wedding or a birthday party), concepts (a landscape, evening, or nature), or activities (running or playing basketball). Amazon Rekognition can detect labels in images and videos.

Use Cases of Amazon Rekognition

Some of the use cases of Amazon Rekognition are:

- Face Liveness detection.

- Face-based user identity verification.

- Searchable media libraries.

- Facial search.

- Celebrity Recognition.

- Text Detection.

Benefits of Amazon Rekognition

Some of the benefits of Amazon Rekognition are:

- Integrating powerful image and video analysis into your app

- Deep learning-based image and video analysis

- Analyze and filter images based on properties

- Integration with other AWS services

Types of Analysis in Amazon Rekognition

Some of the analysis API operations are: DetectModerationLabels, StartMediaAnalysisJob, GetMediaAnalysisJob, and ListMediaAnalysisJobs.
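
As a rough illustration of the first operation in that list, the sketch below calls DetectModerationLabels through boto3 for an image that is already in S3. The function name `find_unsafe_labels`, the 60% confidence threshold, and the optional `client` parameter (there so the function can be tried with a stub) are assumptions made for this example, not part of the original article.

```python
def find_unsafe_labels(bucket, key, min_confidence=60.0, client=None):
    """Return (label name, confidence) pairs for moderation labels in an S3 image.

    bucket/key identify an image you have already uploaded to S3.
    """
    if client is None:
        # Imported lazily so the function can also be exercised with a stub client.
        import boto3
        client = boto3.client("rekognition")

    response = client.detect_moderation_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )
    return [
        (label["Name"], label["Confidence"])
        for label in response.get("ModerationLabels", [])
    ]
```

Calling `find_unsafe_labels("my-example-bucket", "photos/sample.jpg")` (both names are placeholders) returns an empty list when no moderation label is found at or above the threshold.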

The following IAM policy allows use of the Amazon Rekognition console:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "RekognitionFullAccess",
      "Effect": "Allow",
      "Action": [
        "rekognition:*"
      ],
      "Resource": "*"
    },
    {
      "Sid": "RekognitionConsoleS3BucketSearchAccess",
      "Effect": "Allow",
      "Action": [
        "s3:ListAllMyBuckets",
        "s3:ListBucket",
        "s3:GetBucketAcl",
        "s3:GetBucketLocation"
      ],
      "Resource": "*"
    },
    {
      "Sid": "RekognitionConsoleS3BucketFirstUseSetupAccess",
      "Effect": "Allow",
      "Action": [
        "s3:CreateBucket",
        "s3:PutBucketVersioning",
        "s3:PutLifecycleConfiguration",
        "s3:PutEncryptionConfiguration",
        "s3:PutBucketPublicAccessBlock",
        "s3:PutCors",
        "s3:GetCors"
      ],
      "Resource": "arn:aws:s3:::rekognition-custom-projects-*"
    },
    {
      "Sid": "RekognitionConsoleS3BucketAccess",
      "Effect": "Allow",
      "Action": [
        "s3:ListBucket",
        "s3:GetBucketLocation",
        "s3:GetBucketVersioning"
      ],
      "Resource": "arn:aws:s3:::rekognition-custom-projects-*"
    },
    {
      "Sid": "RekognitionConsoleS3ObjectAccess",
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:HeadObject",
        "s3:DeleteObject",
        "s3:GetObjectAcl",
        "s3:GetObjectTagging",
        "s3:GetObjectVersion",
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::rekognition-custom-projects-*/*"
    },
    {
      "Sid": "RekognitionConsoleManifestAccess",
      "Effect": "Allow",
      "Action": [
        "groundtruthlabeling:*"
      ],
      "Resource": "*"
    },
    {
      "Sid": "RekognitionConsoleTagSelectorAccess",
      "Effect": "Allow",
      "Action": [
        "tag:GetTagKeys",
        "tag:GetTagValues"
      ],
      "Resource": "*"
    },
    {
      "Sid": "RekognitionConsoleKmsKeySelectorAccess",
      "Effect": "Allow",
      "Action": [
        "kms:ListAliases"
      ],
      "Resource": "*"
    }
  ]
}

Detecting Objects and Scenes

In the Amazon Rekognition console, you can upload an image that you own or provide the URL of an image as input. Amazon Rekognition returns the objects and scenes it detects in the image, along with a confidence score for each. Let’s quickly check out how to detect objects and scenes:

- Open the Amazon Rekognition console at https://console.aws.amazon.com/rekognition/.

- Choose Label detection.

- Do one of the following:

  - Upload an image – Choose Upload, go to the location where you stored your image, and then select the image.

  - Use a URL – Type the URL in the text box, and then choose Go.

- View the confidence score of each label detected in the Labels | Confidence pane.
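
The console steps above have a rough API equivalent in the DetectLabels operation. The following is a minimal sketch using boto3, assuming the image is already stored in S3 (the API accepts image bytes or an S3 object rather than a URL). The function name `detect_image_labels`, the default limits, and the optional `client` parameter are choices made for this example only.

```python
def detect_image_labels(bucket, key, max_labels=10, min_confidence=75.0, client=None):
    """Return (label name, confidence) pairs for an S3 image, highest confidence first."""
    if client is None:
        # Imported lazily so the function can also be exercised with a stub client.
        import boto3
        client = boto3.client("rekognition")

    response = client.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MaxLabels=max_labels,
        MinConfidence=min_confidence,
    )
    labels = [(label["Name"], label["Confidence"]) for label in response["Labels"]]
    # Sort explicitly so the output mirrors the console's Labels | Confidence pane.
    return sorted(labels, key=lambda pair: pair[1], reverse=True)
```

The returned pairs correspond to what the console shows in the Labels | Confidence pane.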

Analyzing Faces using Amazon Rekognition

To analyze a face in an image you provide:

- Open the Amazon Rekognition console at https://console.aws.amazon.com/rekognition/.

- Choose Facial analysis.

- Do one of the following:

  - Upload an image – Choose Upload, go to the location where you stored your image, and then select the image.

  - Use a URL – Type the URL in the text box, and then choose Go.

- View the confidence score of one of the detected faces and its facial attributes in the Faces | Confidence pane.

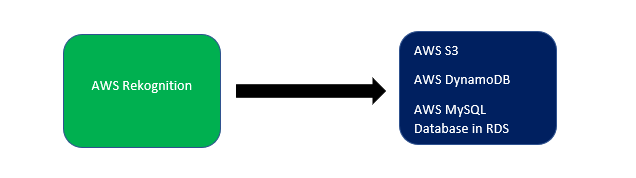
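
For comparison with the console walkthrough, facial analysis is also available programmatically through the DetectFaces operation. The sketch below requests all facial attributes and reduces each face to a few fields. The helper names `summarize_face` and `analyze_faces`, and the choice of fields to keep, are assumptions for illustration.

```python
def summarize_face(face):
    """Reduce one FaceDetails entry to a few commonly used attributes."""
    emotions = face.get("Emotions", [])
    top_emotion = max(emotions, key=lambda e: e["Confidence"], default=None)
    age = face.get("AgeRange")
    return {
        "confidence": face["Confidence"],
        "age_range": (age["Low"], age["High"]) if age else None,
        "smiling": face.get("Smile", {}).get("Value"),
        "top_emotion": top_emotion["Type"] if top_emotion else None,
    }


def analyze_faces(bucket, key, client=None):
    """Run DetectFaces on an S3 image and summarize every detected face."""
    if client is None:
        # Imported lazily so the function can also be exercised with a stub client.
        import boto3
        client = boto3.client("rekognition")

    response = client.detect_faces(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        Attributes=["ALL"],  # the default returns only a subset of attributes
    )
    return [summarize_face(face) for face in response["FaceDetails"]]
```

Each summary carries the detection confidence plus the estimated age range, smile flag, and strongest emotion, which are among the attributes the console lists per face.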

Storing Amazon Rekognition Data with Amazon RDS, DynamoDB, and Amazon S3

When using Amazon Rekognition’s APIs, it’s important to remember that the API operations don’t save any of the generated labels. You can save these labels by storing them in a database.
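
One way to persist labels is to write them to a DynamoDB table. The sketch below assumes a hypothetical table named `RekognitionLabels` with partition key `image_key` and sort key `label`; neither the table nor the attribute names come from the original article. Confidence values are converted to `Decimal` because the boto3 DynamoDB resource does not accept Python floats.

```python
from decimal import Decimal


def labels_to_items(image_key, labels):
    """Convert DetectLabels-style label dicts into DynamoDB items."""
    return [
        {
            "image_key": image_key,                 # assumed partition key
            "label": label["Name"],                 # assumed sort key
            "confidence": Decimal(str(round(label["Confidence"], 2))),
        }
        for label in labels
    ]


def save_labels(image_key, labels, table=None, table_name="RekognitionLabels"):
    """Write one item per label and return the number of items written."""
    if table is None:
        # Imported lazily so the function can also be exercised with a stub table.
        import boto3
        table = boto3.resource("dynamodb").Table(table_name)

    items = labels_to_items(image_key, labels)
    for item in items:
        table.put_item(Item=item)
    return len(items)
```

Here `labels` is the `Labels` list from a DetectLabels response. The same idea applies to Amazon RDS, with an SQL insert in place of `put_item`.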

Creating Amazon Rekognition Lambda Functions

In this section we will create two Lambda functions, along with an SNS topic, an S3 bucket, and a DynamoDB table.

Let’s quickly check out the use case. A user uploads a video to an Amazon S3 bucket. A Lambda function then automatically starts the analysis by calling the Amazon Rekognition Video operation StartLabelDetection, which begins detecting labels in the uploaded video. When the analysis is complete, the completion status is sent to the registered Amazon SNS topic. The SNS topic triggers the second Lambda function, which calls GetLabelDetection to get the analysis results. The results are then stored in a database in preparation for display on a webpage.
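
The first half of this flow could look roughly like the handler below, assuming the function is invoked by an S3 upload event. The environment variable names `SNS_TOPIC_ARN` and `ROLE_ARN`, the helper `video_from_s3_event`, the 50% confidence threshold, and the extra `client` parameter are all assumptions made for this sketch.

```python
import os
from urllib.parse import unquote_plus


def video_from_s3_event(event):
    """Extract (bucket, key) from the first record of an S3 event notification."""
    record = event["Records"][0]["s3"]
    # Object keys arrive URL-encoded in S3 event notifications.
    return record["bucket"]["name"], unquote_plus(record["object"]["key"])


def lambda_handler(event, context, client=None):
    """Start label detection for the uploaded video and return the job ID."""
    if client is None:
        # Imported lazily so the handler can also be exercised with a stub client.
        import boto3
        client = boto3.client("rekognition")

    bucket, key = video_from_s3_event(event)
    response = client.start_label_detection(
        Video={"S3Object": {"Bucket": bucket, "Name": key}},
        NotificationChannel={
            "SNSTopicArn": os.environ["SNS_TOPIC_ARN"],  # assumed variable name
            "RoleArn": os.environ["ROLE_ARN"],           # assumed variable name
        },
        MinConfidence=50.0,
    )
    return {"JobId": response["JobId"]}
```

StartLabelDetection is asynchronous: it returns a job ID immediately, and the completion status is published later to the SNS topic named in `NotificationChannel`.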

Let’s go through the steps for this use case one by one:

- Create the AWS S3 bucket.

- Create the SNS topic and prepend the topic name with AmazonRekognition. Create an IAM service role that gives Amazon Rekognition Video access to your Amazon SNS topics, and attach the AmazonRekognitionServiceRole policy to that role.

- Create the Lambda function and configure it with the following steps:

- Choose Create function.

- In Execution role, choose Create a new role with basic Lambda permissions.

- Choose + Add trigger. In Trigger configuration, choose SNS.

- Attach the AmazonRekognitionFullAccess policy to the execution role of the first Lambda function.

- Also add iam:PassRole permission for the IAM service role that Amazon Rekognition Video uses to access the Amazon SNS topic, as in the following policy:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "mysid",
      "Effect": "Allow",
      "Action": "iam:PassRole",
      "Resource": "arn:servicerole"
    }
  ]
}
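
With the roles and trigger in place, the second Lambda function might be sketched as follows. It reads the job ID from the SNS message that Amazon Rekognition Video publishes and pages through GetLabelDetection. The helper names, the returned dictionary shape, and the extra `client` parameter are assumptions for illustration, and writing the results to a database is left out.

```python
import json


def job_from_sns_event(event):
    """Return (job_id, status) from the Rekognition message inside an SNS event."""
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    return message["JobId"], message["Status"]


def collect_labels(client, job_id):
    """Page through GetLabelDetection and return (timestamp_ms, name, confidence) tuples."""
    labels, token = [], None
    while True:
        kwargs = {"JobId": job_id, "MaxResults": 1000, "SortBy": "TIMESTAMP"}
        if token:
            kwargs["NextToken"] = token
        page = client.get_label_detection(**kwargs)
        for entry in page.get("Labels", []):
            label = entry["Label"]
            labels.append((entry["Timestamp"], label["Name"], label["Confidence"]))
        token = page.get("NextToken")
        if not token:
            return labels


def lambda_handler(event, context, client=None):
    """Fetch label results once the SNS notification reports a finished job."""
    job_id, status = job_from_sns_event(event)
    if status != "SUCCEEDED":
        return {"JobId": job_id, "Status": status, "Labels": []}
    if client is None:
        # Imported lazily so the handler can also be exercised with a stub client.
        import boto3
        client = boto3.client("rekognition")
    return {"JobId": job_id, "Status": status, "Labels": collect_labels(client, job_id)}
```

The tuples returned under `Labels` are the data you would then store in the database for display on the webpage.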

Conclusion

In this article you learned about Amazon Rekognition, the AWS service for image and video analysis.