What is Kinesis?

Amazon Kinesis makes it easy to collect, process, and analyze streaming data in real time. You can ingest real-time data such as application logs, metrics, website clickstreams, and IoT telemetry data.

Kinesis Components

Kinesis handles real-time big data streaming at any scale. Amazon Kinesis has four components:

- Kinesis Data Streams: capture, store, and ingest data streams from any source.

- Kinesis Data Firehose: process and load data into AWS data stores such as Amazon S3, Amazon Redshift, and Amazon OpenSearch Service (formerly Amazon Elasticsearch Service).

- Kinesis Data Analytics: perform real-time analytics on streams using SQL or Apache Flink.

- Kinesis Video Streams: capture, process, store, and monitor real-time video streams.

What is Kinesis Data Streams?

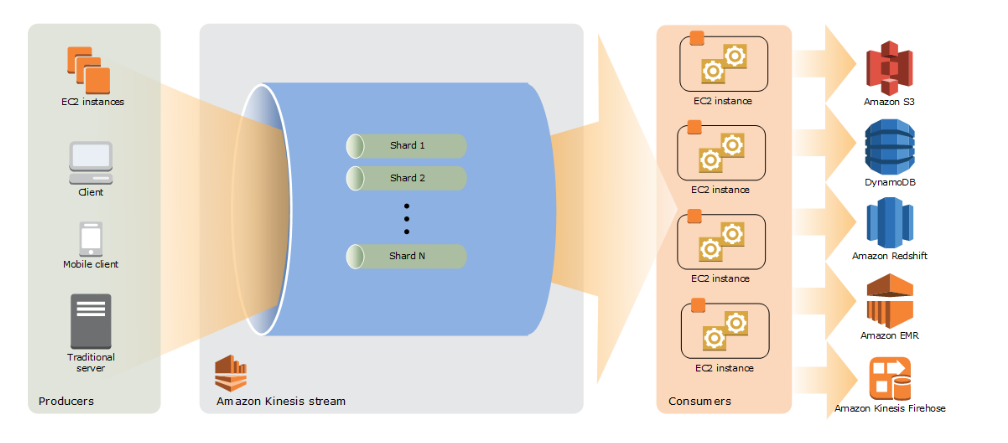

Amazon Kinesis Data Streams collects and processes large streams of data records in real time. The data-processing applications that consume these records are known as Kinesis Data Streams applications.

A Kinesis Data Streams application reads data from a data stream as data records. These applications can use the Kinesis Client Library (KCL), and they can run on Amazon EC2 instances.

You can send the processed records to dashboards, use them to generate alerts, dynamically change pricing and advertising strategies, or send data to a variety of other AWS services.

The producers continually push data to Kinesis Data Streams, and the consumers process the data in real time. Consumers (such as a custom application running on Amazon EC2 or an Amazon Kinesis Data Firehose delivery stream) can store their results using an AWS service such as Amazon DynamoDB, Amazon Redshift, or Amazon S3.
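Each record a producer sends carries a partition key, and Kinesis Data Streams uses an MD5 hash of that key (a 128-bit integer) to pick the shard whose hash key range contains it. That is why all records with the same key land on the same shard, in order. The sketch below assumes the digest is read as an unsigned big-endian integer and that the hash key space is split evenly across shards (roughly what a newly created provisioned stream does; later splits and merges can make ranges uneven). The key names are made up for illustration.

```python
import hashlib

HASH_KEY_SPACE = 2 ** 128  # MD5 digests are 128 bits long


def hash_key(partition_key: str) -> int:
    """MD5 of the partition key, read as an unsigned big-endian 128-bit integer."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest, byteorder="big")


def shard_for_key(partition_key: str, shard_count: int) -> int:
    """Index of the shard whose (evenly split) hash key range contains the key.

    Assumption: shard i owns roughly [i * 2**128 / n, (i + 1) * 2**128 / n).
    """
    return hash_key(partition_key) * shard_count // HASH_KEY_SPACE


# Hypothetical partition keys: the same key always maps to the same shard
for key in ["truck-1", "truck-2", "truck-1"]:
    print(key, "->", shard_for_key(key, 4))
```

Choosing a high-cardinality partition key (user ID, device ID) spreads records across shards; a single constant key would send everything to one shard.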

Some of the important points are:

- Retention can be set from 1 day (24 hours, the default) up to 365 days.

- You can reprocess (replay) data. However, once data is inserted into Kinesis, it cannot be deleted; it stays in the stream until the retention period expires.

- Producers use the AWS SDK, the Kinesis Producer Library (KPL), or the Kinesis Agent.

- Consumers use the Kinesis Client Library (KCL) or the AWS SDK.

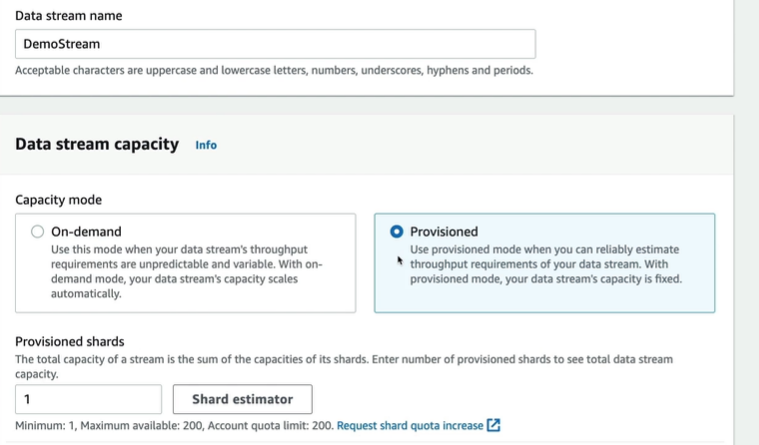

- There are two capacity modes for Kinesis data streams: provisioned mode, where you choose the number of shards, and on-demand mode, where capacity scales automatically with traffic.
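In provisioned mode you size the stream yourself. A rough sketch of the usual arithmetic, using the per-shard quotas of 1 MB/s or 1,000 records/s for writes and 2 MB/s for reads (shared by all standard consumers, each of which reads every record). The function name and inputs are illustrative; on-demand mode does this sizing for you.

```python
import math

# Per-shard quotas in provisioned mode
WRITE_MB_PER_SHARD = 1.0        # 1 MB/s of incoming data per shard
WRITE_RECORDS_PER_SHARD = 1000  # 1,000 records/s of incoming data per shard
READ_MB_PER_SHARD = 2.0         # 2 MB/s of outgoing data per shard (shared consumers)


def estimate_shards(write_mb_per_s: float, records_per_s: int, consumers: int) -> int:
    """Rough shard count for a provisioned stream.

    Every standard consumer reads all the data, so outgoing bandwidth is
    incoming bandwidth multiplied by the number of consumers.
    """
    read_mb_per_s = write_mb_per_s * consumers
    needed = max(
        write_mb_per_s / WRITE_MB_PER_SHARD,
        records_per_s / WRITE_RECORDS_PER_SHARD,
        read_mb_per_s / READ_MB_PER_SHARD,
    )
    return max(1, math.ceil(needed))


# 2 MB/s written, 100 records/s, read by 4 applications: the read side dominates
print(estimate_shards(2, 100, 4))  # 4
```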

AWS Kinesis Data Streams Security

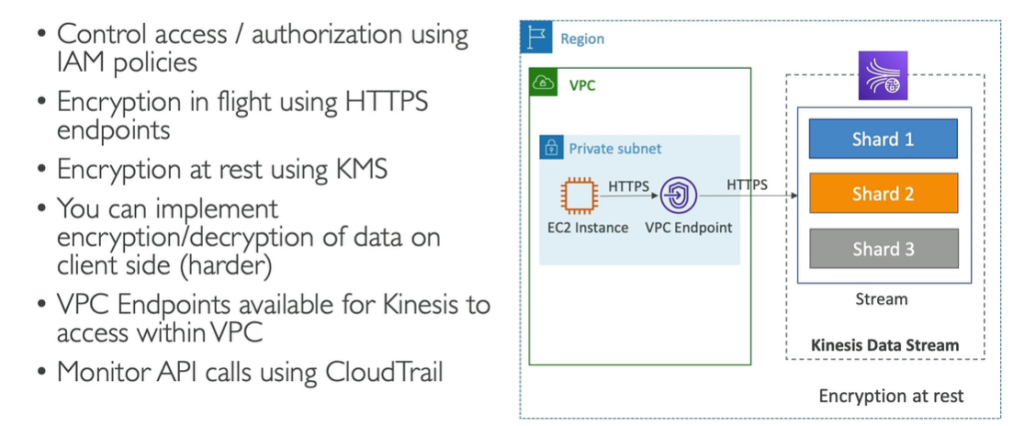

Kinesis Data Streams is deployed within a region, along with the shards of your stream. You control access to write to and read from the shards using IAM policies. Data is encrypted in flight using HTTPS and at rest using AWS KMS.
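As a minimal sketch of least-privilege access, the snippet below builds two IAM policy documents: one that only lets a producer write to a single stream and one that lets a consumer read from it. The region, account ID, and stream name are hypothetical placeholders. A KCL consumer typically needs additional permissions (for example DynamoDB for its lease table and CloudWatch for metrics), which are not shown here.

```python
import json


def stream_arn(region: str, account_id: str, stream_name: str) -> str:
    # Kinesis Data Streams ARN format: arn:aws:kinesis:<region>:<account>:stream/<name>
    return f"arn:aws:kinesis:{region}:{account_id}:stream/{stream_name}"


def producer_policy(arn: str) -> dict:
    """Allow writing records to one stream, nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": ["kinesis:PutRecord", "kinesis:PutRecords"],
            "Resource": arn,
        }],
    }


def consumer_policy(arn: str) -> dict:
    """Allow discovering shards and reading records from one stream."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": [
                "kinesis:DescribeStream",
                "kinesis:ListShards",
                "kinesis:GetShardIterator",
                "kinesis:GetRecords",
            ],
            "Resource": arn,
        }],
    }


# Hypothetical region, account, and stream name
arn = stream_arn("us-east-1", "123456789012", "my-stream")
print(json.dumps(producer_policy(arn), indent=2))
```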

You can also implement your own encryption and decryption of data on the client side, which is called client-side encryption. It is harder to implement because you must encrypt and decrypt the data yourself, but it enhances security. VPC endpoints are available for Kinesis, which let you access Kinesis directly over HTTPS, for instance from a private subnet, without going through the internet.

Finally, all API calls can be monitored using AWS CloudTrail.

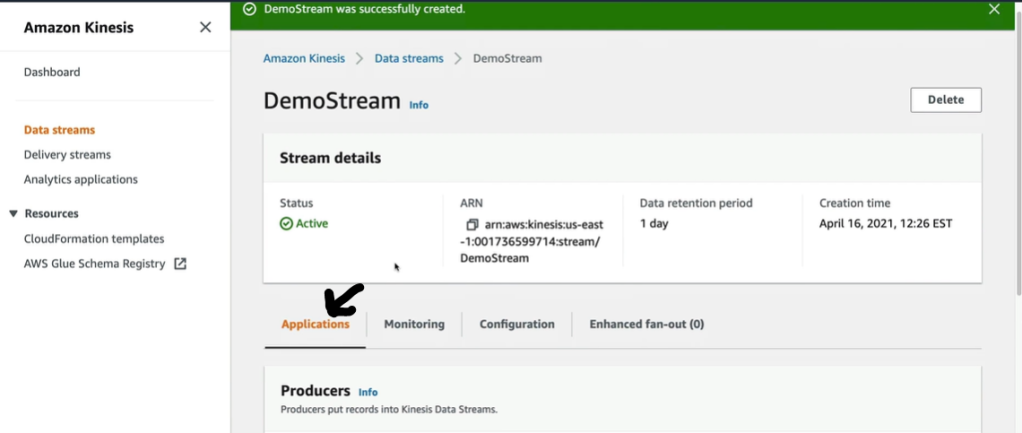

Creating Kinesis Data Streams in the AWS Console

To create a Kinesis data stream in the AWS Console, follow these steps:

- Navigate to the AWS Console and search for Kinesis. Select Kinesis Data Streams and click Create data stream.

- Under Applications, you will see the producers and consumers for the stream.

- Next, you can run commands to produce messages to the stream, and consumers can consume those messages.
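When a producer sends many messages, it usually groups them into PutRecords calls. A sketch of that batching, assuming the documented PutRecords limits of 500 records per request, 1 MiB per record (data plus partition key), and 5 MiB per request. The record shape mirrors the `Data`/`PartitionKey` fields of the API; the event contents are made up.

```python
from typing import Iterable, Iterator, List

MAX_RECORDS_PER_CALL = 500
MAX_RECORD_BYTES = 1024 * 1024       # 1 MiB per record (data + partition key)
MAX_REQUEST_BYTES = 5 * 1024 * 1024  # 5 MiB per PutRecords request


def record_size(record: dict) -> int:
    return len(record["Data"]) + len(record["PartitionKey"].encode("utf-8"))


def batch_records(records: Iterable[dict]) -> Iterator[List[dict]]:
    """Group {"Data": bytes, "PartitionKey": str} dicts into PutRecords-sized batches."""
    batch: List[dict] = []
    batch_bytes = 0
    for record in records:
        size = record_size(record)
        if size > MAX_RECORD_BYTES:
            raise ValueError("record exceeds the 1 MiB per-record limit")
        # Start a new batch if adding this record would break a request limit
        if batch and (len(batch) == MAX_RECORDS_PER_CALL
                      or batch_bytes + size > MAX_REQUEST_BYTES):
            yield batch
            batch, batch_bytes = [], 0
        batch.append(record)
        batch_bytes += size
    if batch:
        yield batch


# Hypothetical events keyed by user
records = [{"Data": f"event-{i}".encode(), "PartitionKey": f"user-{i % 10}"}
           for i in range(1200)]
batches = list(batch_records(records))
print([len(b) for b in batches])  # [500, 500, 200]
```

With boto3, each batch could then be sent with `client.put_records(StreamName=..., Records=batch)`. PutRecords can partially succeed, so a real producer should check `FailedRecordCount` in the response and retry the failed entries.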

What is Kinesis Data Firehose?

- Kinesis Data Firehose is a fully managed, serverless service with no administration and automatic scaling.

- You pay only for the data going through Firehose.

- Delivery is near real time, with low latency.

- It supports many data formats, conversions, transformations, and compression. It also supports custom data transformation using AWS Lambda.

- Data Firehose delivers real-time streaming data to Amazon S3, Amazon Redshift, Amazon OpenSearch Service, Splunk, any HTTP endpoint, or third-party providers such as Dynatrace and Datadog.

- It can also transform the data.

- With Kinesis Data Firehose, you configure producers such as Amazon EC2, AWS WAF, or CloudWatch Logs to send data to Firehose, which automatically delivers the data to the destination.

- For Amazon Redshift destinations, streaming data is delivered to your S3 bucket first. Kinesis Data Firehose then issues an Amazon Redshift COPY command to load data from your S3 bucket to your Amazon Redshift cluster.

- You can also configure Kinesis Data Firehose to transform your data before delivering it.

- Kinesis Data Firehose supports Amazon S3 server-side encryption with AWS Key Management Service (AWS KMS) for encrypting delivered data in Amazon S3.

- If data transformation is enabled, Kinesis Data Firehose can log the Lambda invocation, and send data delivery errors to CloudWatch Logs.

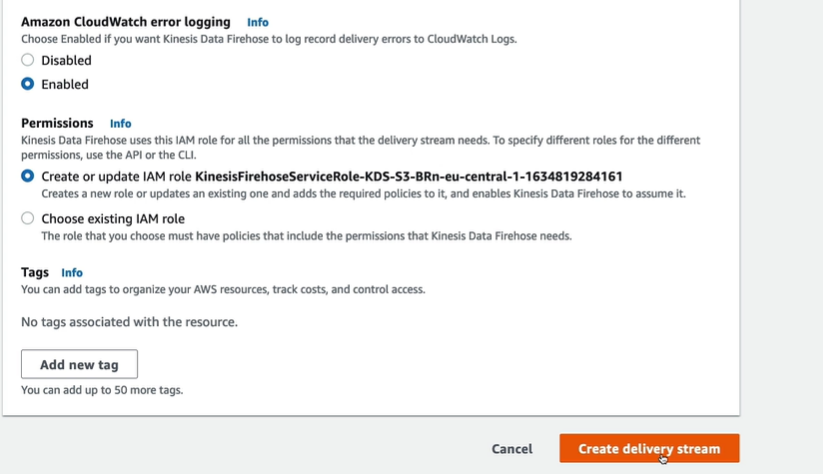

- Kinesis Data Firehose uses IAM roles for all the permissions that the delivery stream needs, such as access to your S3 bucket, your AWS KMS key (if data encryption is enabled), and your Lambda function (if data transformation is enabled).
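A transformation Lambda receives a batch of records, each with a `recordId` and base64-encoded `data`, and must return every `recordId` with a `result` of `Ok`, `Dropped`, or `ProcessingFailed` plus base64-encoded `data`. The sketch below follows that contract; the filtering rule (keep only JSON records whose hypothetical `level` field is `ERROR`) and the added `processed` flag are invented for illustration.

```python
import base64
import json


def lambda_handler(event, context):
    """Sketch of a Firehose data-transformation Lambda.

    Hypothetical rule: keep JSON records whose "level" is "ERROR", add a
    "processed" flag, and end each record with a newline so records stay
    separated inside the delivered S3 objects.
    """
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
        except ValueError:
            # Bad base64 or bad JSON: mark the record as failed instead of crashing
            output.append({"recordId": record["recordId"],
                           "result": "ProcessingFailed",
                           "data": record["data"]})
            continue
        if not isinstance(payload, dict) or payload.get("level") != "ERROR":
            # Dropped means intentionally filtered out, not an error
            output.append({"recordId": record["recordId"],
                           "result": "Dropped",
                           "data": record["data"]})
            continue
        payload["processed"] = True
        transformed = (json.dumps(payload) + "\n").encode("utf-8")
        output.append({"recordId": record["recordId"],
                       "result": "Ok",
                       "data": base64.b64encode(transformed).decode("ascii")})
    return {"records": output}
```

Records marked `ProcessingFailed` are treated as failures by Firehose (and can be logged to CloudWatch Logs if error logging is enabled), while `Dropped` records are silently discarded.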

Kinesis Data Streams vs Kinesis Data Firehose

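Note: Kinesis vs SQS ordering: Kinesis Data Streams keeps records in order per shard (all records with the same partition key go to the same shard), so a stream with several shards gives you several ordered sequences processed in parallel. With SQS FIFO you have only one queue, and ordering is maintained per message group ID.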

AWS SQS vs AWS SNS vs AWS Kinesis

The differences between AWS SQS, AWS SNS, and AWS Kinesis are summarized below.

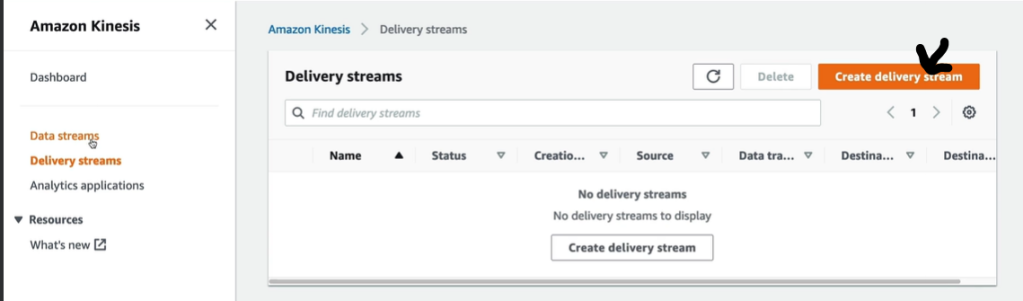

Launching Kinesis Data Firehose using a delivery stream

You create a delivery stream and then send your data to it; Kinesis Data Firehose uses the delivery stream to deliver that data to your chosen destination.

- First, create a delivery stream in Kinesis Data Firehose.

- The delivery stream name is filled in automatically.

- Select the destination settings.

- Optionally, enable data transformation using a Lambda function; otherwise, keep it disabled.

- Finally, create the delivery stream.

- After you add records to the delivery stream, the data appears in the Amazon S3 bucket.
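If you do not configure a custom prefix, Firehose writes S3 objects under a UTC time prefix of the form `YYYY/MM/DD/HH/`, based approximately on when the records arrived. A small sketch of how to predict which "folder" a record lands in; the timestamps are made up.

```python
from datetime import datetime, timedelta, timezone


def default_s3_prefix(arrival: datetime) -> str:
    """UTC 'YYYY/MM/DD/HH/' prefix that Firehose uses by default for S3 object keys."""
    if arrival.tzinfo is None:
        raise ValueError("pass a timezone-aware datetime")
    return arrival.astimezone(timezone.utc).strftime("%Y/%m/%d/%H/")


print(default_s3_prefix(datetime(2024, 3, 5, 23, 30, tzinfo=timezone.utc)))  # 2024/03/05/23/

# A record arriving at 23:30 in UTC-5 falls into the next UTC day
est = timezone(timedelta(hours=-5))
print(default_s3_prefix(datetime(2024, 3, 5, 23, 30, tzinfo=est)))  # 2024/03/06/04/
```

Because the prefix is in UTC, records produced late in the evening local time can show up under the next day's prefix.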

Records

- A record is the data of interest that your data producer sends to a Kinesis Data Firehose delivery stream.

Producers

- Producers send records to Kinesis Data Firehose delivery streams.

- You can also configure your Kinesis Data Firehose delivery stream to automatically read data from an existing Kinesis data stream and load it into destinations.

- When creating the delivery stream, choose Amazon Kinesis Data Streams as the source instead of Direct PUT, and select the data stream (you can create a new one first).

- To send data from files on your servers to Kinesis Data Firehose, you can use the Amazon Kinesis Agent, a standalone Java application that offers an easy way to collect and send data to Kinesis Data Firehose.

- You can install the agent on Linux-based server environments such as web servers, log servers, and database servers. The agent can pre-process the records parsed from monitored files before sending them to your delivery stream.

```shell
sudo yum install -y aws-kinesis-agent
```

- To configure the agent, open and edit the configuration file (as superuser if using default file access permissions):

/etc/aws-kinesis/agent.json

- Then start the agent:

```shell
sudo service aws-kinesis-agent start
```

- The IAM role or AWS credentials that you specify must have permission to perform the Kinesis Data Firehose PutRecordBatch operation for the agent to send data to your delivery stream.

```json
{
  "flows": [
    {
      "filePattern": "/tmp/app.log*",
      "deliveryStream": "yourdeliverystream"
    }
  ]
}
```

Example (this flow also converts Apache Common Log lines to JSON before sending them):

```json
{
  "flows": [
    {
      "filePattern": "/tmp/app.log*",
      "deliveryStream": "my-delivery-stream",
      "dataProcessingOptions": [
        {
          "optionName": "LOGTOJSON",
          "logFormat": "COMMONAPACHELOG"
        }
      ]
    }
  ]
}
```
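To show roughly what the `LOGTOJSON` option with `COMMONAPACHELOG` does to each line, here is an approximation in Python. It is not the agent's code: the regular expression and the output field names (`host`, `ident`, `authuser`, `datetime`, `request`, `response`, `bytes`) are assumptions modeled on the agent documentation's example, with `-` placeholders turned into JSON `null`.

```python
import json
import re

# Apache Common Log Format: host ident authuser [datetime] "request" status bytes
COMMON_LOG = re.compile(
    r'^(?P<host>\S+) (?P<ident>\S+) (?P<authuser>\S+) '
    r'\[(?P<datetime>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<response>\S+) (?P<bytes>\S+)$'
)


def log_to_json(line: str) -> str:
    """Approximate the agent's LOGTOJSON/COMMONAPACHELOG conversion for one line."""
    match = COMMON_LOG.match(line.strip())
    if match is None:
        raise ValueError(f"not a Common Log Format line: {line!r}")
    # "-" means the field is absent in Common Log Format; emit null instead
    fields = {name: (None if value == "-" else value)
              for name, value in match.groupdict().items()}
    return json.dumps(fields)


line = ('64.242.88.10 - - [07/Mar/2004:16:10:02 -0800] '
        '"GET /mailman/listinfo/hsdivision HTTP/1.1" 200 6291')
print(log_to_json(line))
```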

How to create a Kinesis Data Firehose delivery stream with dynamic partitioning enabled

- Navigate to Amazon Kinesis and click Delivery streams.

- Next, choose Amazon S3 as the destination. Here, Dynamic partitioning was selected as Not enabled.

Note: Dynamic partitioning enables you to create targeted data sets by partitioning streaming S3 data based on partitioning keys. You can partition your source data with inline parsing and/or the specified AWS Lambda function. You can enable dynamic partitioning only when you create a new delivery stream. You cannot enable dynamic partitioning for an existing delivery stream.

- Enable Amazon CloudWatch error logging and create a new IAM role.
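When dynamic partitioning uses a Lambda function (rather than inline parsing), the function returns each record with a `metadata.partitionKeys` object, and the S3 prefix refers to those keys with `!{partitionKeyFromLambda:<name>}` expressions. The sketch below assumes records are JSON objects with a hypothetical `customer_id` field; the `s3_prefix` helper only illustrates locally how such a prefix resolves and is not part of any AWS API.

```python
import base64
import json


def lambda_handler(event, context):
    """Sketch: attach a partition key to each record for Firehose dynamic partitioning.

    Assumes each record is a JSON object with a hypothetical "customer_id" field.
    """
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            customer_id = str(payload["customer_id"])
        except (ValueError, KeyError, TypeError):
            # Unparseable record or missing key: report it instead of failing the batch
            output.append({"recordId": record["recordId"],
                           "result": "ProcessingFailed",
                           "data": record["data"]})
            continue
        output.append({
            "recordId": record["recordId"],
            "result": "Ok",
            "data": record["data"],  # payload passed through unchanged
            "metadata": {"partitionKeys": {"customer_id": customer_id}},
        })
    return {"records": output}


def s3_prefix(template: str, partition_keys: dict) -> str:
    """Local illustration of how !{partitionKeyFromLambda:...} expressions resolve."""
    for name, value in partition_keys.items():
        template = template.replace("!{partitionKeyFromLambda:" + name + "}", value)
    return template


print(s3_prefix("customers/customer_id=!{partitionKeyFromLambda:customer_id}/",
                {"customer_id": "42"}))  # customers/customer_id=42/
```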

Kinesis Data Firehose uses Amazon S3 to back up either all data or only the failed data that it attempts to deliver to your chosen destination. You can specify the S3 backup settings in the following cases:

- If you set Amazon S3 as the destination for your Kinesis Data Firehose delivery stream and you choose to specify an AWS Lambda function to transform data records, or you choose to convert data record formats for your delivery stream.

- If you set Amazon Redshift as the destination for your Kinesis Data Firehose delivery stream and you choose to specify an AWS Lambda function to transform data records.

- If you set any of the following services as the destination for your Kinesis Data Firehose delivery stream: Amazon OpenSearch Service, Datadog, Dynatrace, HTTP Endpoint, LogicMonitor, MongoDB Cloud, New Relic, Splunk, or Sumo Logic.

When you send data from your data producers to your data stream, Kinesis Data Streams encrypts your data using an AWS Key Management Service (AWS KMS) key before storing the data at rest.

When your Kinesis Data Firehose delivery stream reads the data from your data stream, Kinesis Data Streams first decrypts the data and then sends it to Kinesis Data Firehose.

Writing to a Kinesis Data Firehose Delivery Stream Using CloudWatch Events

- On the CloudWatch page, click Rules.

- Click Create rule, then provide the source (event pattern) and target (your delivery stream) details.
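The rule's source is an event pattern: a JSON object whose leaf values are lists of allowed values (CloudWatch Events is now Amazon EventBridge, which uses the same pattern syntax). The simplified matcher below handles only exact-value matching, not content filters such as prefix or numeric matching, so treat it as an illustration of the idea rather than EventBridge's full semantics. The pattern selects EC2 instances entering the `stopped` state; the instance ID is hypothetical.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Simplified EventBridge-style matching for exact-value patterns.

    Each pattern leaf is a list of allowed values; nested dicts match nested
    event fields; an array-valued event field matches if any element is allowed.
    """
    for field, expected in pattern.items():
        if field not in event:
            return False
        actual = event[field]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif isinstance(actual, list):
            if not any(value in expected for value in actual):
                return False
        elif actual not in expected:
            return False
    return True


# Rule pattern: EC2 instance state changes to "stopped"; the rule's target
# would be the Firehose delivery stream
PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["EC2 Instance State-change Notification"],
    "detail": {"state": ["stopped"]},
}

stopped = {
    "source": "aws.ec2",
    "detail-type": "EC2 Instance State-change Notification",
    "detail": {"instance-id": "i-0123456789abcdef0", "state": "stopped"},
}
print(matches(PATTERN, stopped))  # True
```

Fields the pattern does not mention (such as `instance-id`) are ignored, so a pattern only needs the fields you want to filter on.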

Sending Amazon VPC Flow Logs to a Kinesis Data Firehose Delivery Stream (Splunk) Using CloudWatch

- In Amazon VPC, create a VPC flow log with a CloudWatch Logs log group as the destination.

- In the CloudWatch console, choose Log groups and create a log group.

- Create a Kinesis Data Firehose delivery stream with Splunk as the destination.

- Now, create a CloudWatch Logs subscription filter that sends all the log events from the log group to the delivery stream:

```shell
aws logs put-subscription-filter --log-group-name "VPCtoSplunkLogGroup" --filter-name "Destination" --filter-pattern "" --destination-arn "arn:aws:firehose:your-region:your-aws-account-id:deliverystream/VPCtoSplunkStream" --role-arn "arn:aws:iam::your-aws-account-id:role/VPCtoSplunkCWtoFHRole"
```
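Data that a CloudWatch Logs subscription delivers is gzip-compressed JSON containing a `messageType`, the log group and stream names, and a `logEvents` list. If you inspect or transform these records (for example in a Firehose transformation Lambda, after base64-decoding the record data), you need to decompress them first. The sketch assumes the flow logs use the default version 2 field order; the sample event is made up.

```python
import gzip
import json

# Field order of the default (version 2) VPC flow log format
FLOW_LOG_FIELDS = ["version", "account-id", "interface-id", "srcaddr", "dstaddr",
                   "srcport", "dstport", "protocol", "packets", "bytes",
                   "start", "end", "action", "log-status"]


def decode_subscription_payload(blob: bytes) -> list:
    """Unpack a gzip-compressed CloudWatch Logs subscription payload into flow-log dicts."""
    message = json.loads(gzip.decompress(blob))
    if message.get("messageType") != "DATA_MESSAGE":
        return []  # e.g. CONTROL_MESSAGE, used to check the destination is reachable
    rows = []
    for log_event in message["logEvents"]:
        values = log_event["message"].split()
        if len(values) == len(FLOW_LOG_FIELDS):  # skip lines in a custom format
            rows.append(dict(zip(FLOW_LOG_FIELDS, values)))
    return rows


# Made-up payload shaped like a subscription delivery
sample = {
    "messageType": "DATA_MESSAGE",
    "logGroup": "VPCtoSplunkLogGroup",
    "logEvents": [{"id": "1", "timestamp": 1418530070000,
                   "message": "2 123456789010 eni-1235b8ca123456789 172.31.16.139 "
                              "172.31.16.21 20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK"}],
}
rows = decode_subscription_payload(gzip.compress(json.dumps(sample).encode()))
print(rows[0]["srcaddr"], "->", rows[0]["dstaddr"], rows[0]["action"])
```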

What is Kinesis Data Analytics?

Kinesis Data Analytics lets you perform real-time analytics on Kinesis Data Streams and Kinesis Data Firehose using SQL. It can also add reference data from Amazon S3 to enrich the streaming data.

It is fully managed, with no servers to provision and automatic scaling, and you pay for the actual consumption rate. It is primarily used for time-series analytics, real-time dashboards, and real-time metrics.
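A typical real-time metric is a count per key over fixed, non-overlapping time windows (a tumbling window). In Kinesis Data Analytics SQL this is usually expressed as a GROUP BY over a time window of the stream's ROWTIME (for example with the STEP function); the Python sketch below shows the same logic on a small in-memory list of hypothetical (timestamp, page) clickstream events, so it illustrates the windowing idea rather than the service itself.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(events: Iterable[Tuple[float, str]],
                           window_seconds: int = 60) -> Dict[int, Counter]:
    """Count events per key in fixed, non-overlapping (tumbling) windows.

    `events` are (epoch_seconds, key) pairs; the result maps each window's
    start time to a Counter of the keys seen in that window.
    """
    windows: Dict[int, Counter] = defaultdict(Counter)
    for timestamp, key in events:
        window_start = int(timestamp // window_seconds) * window_seconds
        windows[window_start][key] += 1
    return dict(windows)


# Hypothetical clickstream: (seconds since start, page)
clicks = [(0, "/home"), (30, "/home"), (59, "/cart"), (60, "/home"), (125, "/cart")]
for start, counts in sorted(tumbling_window_counts(clicks).items()):
    print(start, dict(counts))
```

A streaming engine emits each window's result once the window closes, instead of waiting for the whole input as this batch sketch does.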

What is Amazon Managed Service for Apache Flink (Kinesis Data Analytics for Apache Flink)?

With it, you can easily process and analyze streaming data. You can run any Apache Flink application on a managed cluster on AWS.

You can create streaming applications using Apache Flink with Java or Python.