Kubernetes Scheduler

You normally do not set the nodeName property in a manifest file yourself. The Kubernetes scheduler identifies the right node for the pod, and Kubernetes then sets nodeName automatically.

A pod can be assigned to a node only at creation time. Kubernetes does not allow you to change nodeName after the pod is created; to assign an existing pod to a node, create a Binding object and send it to the pod's binding API instead.
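As a rough sketch of the Binding approach described above, the object could look like the following. The pod name nginx and the node name node01 are assumed here for illustration. The object is sent as a POST request to the pod's binding subresource (/api/v1/namespaces/<namespace>/pods/<pod-name>/binding), usually after converting the YAML to JSON.

```yaml
apiVersion: v1
kind: Binding
metadata:
  name: nginx        # assumed name of the pending pod to bind
target:
  apiVersion: v1
  kind: Node
  name: node01       # assumed name of the node that should run the pod
```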

- The file name is Pod-bind-definition.yaml. The manifest below schedules the pod onto the node01 node by setting nodeName directly.

---
apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  nodeName: node01
  containers:
  - image: nginx
    name: nginx

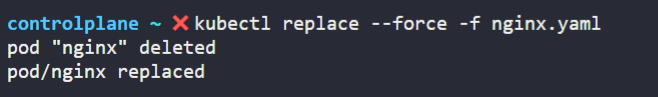

kubectl replace --force -f nginx.yaml

Table of Contents

- Kubernetes Scheduler

- Kubernetes Label Selector

- Kubernetes Taints and Tolerations

- Kubernetes Node Selector

- Kubernetes Node Affinity

- Kubernetes Resource Limits and Requests

- Kubernetes Default Memory and CPU Requests and Limits

- Kubernetes Pod Quota for a Namespace

- Kubernetes DaemonSets

- Kubernetes Static Pods

- Kubernetes Multiple Schedulers

- Kubernetes Scheduler Profiles

- Monitoring Kubernetes Cluster

- Kubernetes Logging

- Kubernetes Rollouts

- Kubernetes Strategy

- Create Kubernetes Deployments, Get Deployments, and Kubernetes Rollout Status

- Kubernetes Commands and Arguments

Kubernetes Label Selector

Labels: Labels are key-value pairs attached to Kubernetes objects as tags.

Selectors: Selectors filter Kubernetes objects by their labels.

To select pods from the command line, use the commands below. The --no-headers flag removes the header row, so piping the output to wc -l counts only the matching objects.

kubectl get pods --selector app=App1

kubectl get pods --selector 'env=dev'

kubectl get pods --selector 'env=prod' --no-headers | wc -l

kubectl get all --selector 'env=prod' --no-headers | wc -l

kubectl get pods --selector 'env=prod,bu=finance,tier=frontend' --no-headers | wc -l
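For these selectors to match anything, the pods must carry the corresponding labels. A minimal sketch of a pod that the last command would count is shown here; the pod name and image are hypothetical, and the label values are taken from that command.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: finance-frontend   # hypothetical pod name
  labels:
    env: prod              # matched by env=prod
    bu: finance            # matched by bu=finance
    tier: frontend         # matched by tier=frontend
spec:
  containers:
  - name: nginx
    image: nginx           # assumed image, for illustration only
```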

For example, the labels in a ReplicaSet manifest file are defined in two places: under metadata, which labels the ReplicaSet itself, and under template, which labels the pods it creates. The selector's matchLabels must match the pod labels in the template.

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: nginx-replicasets
  labels:
    app: nginx
spec:
  replicas: 3
  selector:
    matchLabels: # the ReplicaSet manages only pods whose labels match app: nginx
      app: nginx
  template:
    metadata:
      labels: # pod label; must match the selector above
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx:1.14.2
        ports:
        - containerPort: 80

Kubernetes Taints and Tolerations

A taint marks a node so that it repels pods. A toleration on a pod means the taint applied to the node is acceptable to that pod and does not affect it. A pod without a matching toleration is intolerant of the taint, so the node does not accept it.

Taints are set on nodes, while tolerations are set on pods. A toleration allows the scheduler to place the pod on a node with a matching taint.

You add a taint to a node using kubectl taint. For example:

kubectl taint nodes node-name key=value:taint-effect

kubectl taint nodes node1 key1=value1:NoSchedule

There are three taint effects, which determine what happens to pods that do not tolerate the taint.

- NoSchedule: New pods will not be scheduled on the node.

- PreferNoSchedule: The system will try not to schedule the pod on the node, but this is not guaranteed.

- NoExecute: New pods will not be scheduled on the node, and existing pods will be evicted if they do not tolerate the taint.

Important point: Pods are assigned only to worker nodes and not to the master node because a taint is applied to the master node. To verify this, run the command below.

kubectl describe node kubemaster | grep Taints

controlplane ~ ✖ kubectl describe node controlplane | grep Taints

Taints: node-role.kubernetes.io/control-plane:NoSchedule

To remove the taint from the node, repeat the taint with a minus sign (-) appended:

controlplane ~ ✖ kubectl taint node controlplane node-role.kubernetes.io/control-plane:NoSchedule-

node/controlplane untainted

The manifest file below shows a pod with a toleration applied.

apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    env: test
spec:
  containers:
  - name: nginx
    image: nginx
    imagePullPolicy: IfNotPresent
  tolerations:
  - key: "example-key"
    operator: "Exists"
    effect: "NoSchedule"
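The Exists operator tolerates any value of the key. To tolerate only one specific key-value pair, the Equal operator can be used instead. The sketch below tolerates the key1=value1:NoSchedule taint from the earlier kubectl taint example; the pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-tolerant     # hypothetical pod name
spec:
  containers:
  - name: nginx
    image: nginx
  tolerations:
  - key: "key1"            # taint key from: kubectl taint nodes node1 key1=value1:NoSchedule
    operator: "Equal"      # key, value, and effect must all match the taint
    value: "value1"
    effect: "NoSchedule"
```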

If every available node has a taint that the pod does not tolerate, the pod stays unscheduled and you will see an event like the one below:

Warning FailedScheduling 59s default-scheduler 0/2 nodes are available: 1 node(s) had untolerated taint {node-role.kubernetes.io/control-plane: }, 1 node(s) had untolerated taint {spray: mortein}. preemption: 0/2 nodes are available: 2 Preemption is not helpful for scheduling..

Kubernetes Node Selector

A node selector restricts a pod to nodes that carry specific labels. To use it, add the nodeSelector property under the spec section of the manifest file. Before doing that, make sure the node has the matching label, as shown below.

kubectl label nodes <node-name> <label-key>=<label-value>

kubectl label nodes node-1 size=Large

The manifest file then looks like this:

apiVersion: v1
kind: Pod
metadata:
  name: myapp-pod
spec:
  containers:
  - name: nginx
    image: nginx
  nodeSelector:
    size: Large

Kubernetes Node Affinity

Node affinity serves the same purpose as node selector, placing a pod on particular nodes, but it supports more expressive rules. For example, to place a pod on either medium or large nodes, or on any node that is not small, you must use node affinity.

apiVersion: v1
kind: Pod
metadata:
  name: with-node-affinity
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: topology.kubernetes.io/zone
            operator: In
            values:
            - antarctica-east1
            - antarctica-west1
          - key: size
            operator: NotIn # In and Exists are also valid operators; Exists takes no values
            values:
            - Small
  containers:
  - name: with-node-affinity
    image: registry.k8s.io/pause:2.0
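The "medium or large" case mentioned above can be sketched with a single In expression. This assumes the nodes were labeled with a size key (for example size=Large or size=Medium) as in the node selector section; the pod name is hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: medium-or-large    # hypothetical pod name
spec:
  affinity:
    nodeAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        nodeSelectorTerms:
        - matchExpressions:
          - key: size       # assumed node label key
            operator: In    # the node's size label must be one of the values below
            values:
            - Large
            - Medium
  containers:
  - name: medium-or-large
    image: registry.k8s.io/pause:2.0
```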

Kubernetes Resource Limits and Requests

Pods need two main resource types in Kubernetes: CPU and memory. Based on what a pod requests, the scheduler decides which node has enough of each resource to run it.

Requests and limits are not set on the pod as a whole; they are set per container.

---
apiVersion: v1
kind: Pod
metadata:
  name: frontend
spec:
  containers:
  - name: app
    image: images.my-company.example/app:v4
    resources:
      requests:
        memory: "64Mi"
        cpu: "250m"
      limits:
        memory: "128Mi"
        cpu: "500m"
  - name: log-aggregator
    image: images.my-company.example/log-aggregator:v6
    resources:
      requests:
        memory: "64Mi"
        cpu: "250m"
      limits:
        memory: "128Mi"
        cpu: "500m"

Kubernetes Default Memory and CPU Requests and Limits

For a namespace, you can set default request and limit values with a LimitRange object, under its spec section. You can also define minimum and maximum memory and CPU constraints for the namespace.

Let's check out the manifest file below.

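apiVersion: v1
kind: LimitRange
metadata:
  name: cpu-limit-range
spec:
  limits:
  - default: # limits applied to containers that set none
      cpu: 1
      memory: 512Mi
    defaultRequest: # requests applied to containers that set none
      cpu: 0.5
      memory: 256Mi
    max: # must not be lower than the default limits
      memory: 1Gi
      cpu: 1
    min: # must not be higher than the default requests
      memory: 256Mi
      cpu: "200m"
    type: Container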

Kubernetes Pod Quota for a Namespace

To limit the total number of pods that can run in a namespace, specify the quota in a ResourceQuota object.

apiVersion: v1
kind: ResourceQuota
metadata:
  name: pod-demo
spec:
  hard:
    pods: "2"

Kubernetes DaemonSets

A Kubernetes DaemonSet ensures that a copy of a pod runs on each node in the cluster. As new nodes are added, a copy of the pod is started on each of them. DaemonSet pods are created through the kube-apiserver.

A common use case for DaemonSets is deploying a log agent on each node:

apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      tolerations:
      # these tolerations are to have the daemonset runnable on control plane nodes
      # remove them if your control plane nodes should not run pods
      - key: node-role.kubernetes.io/control-plane
        operator: Exists
        effect: NoSchedule
      - key: node-role.kubernetes.io/master
        operator: Exists
        effect: NoSchedule
      containers:
      - name: fluentd-elasticsearch
        image: quay.io/fluentd_elasticsearch/fluentd:v2.5.2
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log

kubectl has no create command for DaemonSets. Instead, generate a Deployment manifest with the command below, then edit the YAML file: change kind to DaemonSet and remove the fields a DaemonSet does not accept, such as replicas and strategy.

kubectl create deployment elasticsearch --image=registry.k8s.io/fluentd-elasticsearch:1.20 -n kube-system --dry-run=client -o yaml > daemon.yaml
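After editing, daemon.yaml could look roughly like the sketch below. The labels and container name are what kubectl create deployment typically generates from the name and image given above; treat them as assumptions and compare against your own generated file.

```yaml
apiVersion: apps/v1
kind: DaemonSet                    # changed from Deployment
metadata:
  name: elasticsearch
  namespace: kube-system
  labels:
    app: elasticsearch
spec:                              # replicas and strategy removed: a DaemonSet has neither field
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
      - name: fluentd-elasticsearch
        image: registry.k8s.io/fluentd-elasticsearch:1.20
```

The file can then be applied with kubectl create -f daemon.yaml.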

Kubernetes Static Pods

Suppose you have only a worker node with the kubelet configured and no other cluster components. Pods that the kubelet creates on that node directly from manifest files are known as static pods. One use case is launching the control plane: the controller manager, API server, and etcd components each get their own manifest file and run as pods on a node rather than being installed as binaries.

This is how the kubeadm tool sets up the control plane pods of a Kubernetes cluster in the kube-system namespace. Static pods are created by the kubelet.

- The directory that the kubelet watches for static pod manifests is set in the kubelet service in one of the ways below.

--pod-manifest-path=/etc/kubernetes/manifests

--config=kubeconfig.yaml // Define the path inside kubeconfig.yaml by adding staticPodPath

/var/lib/kubelet // Check config.yaml in this directory for the staticPodPath value

- On a node that is not part of a Kubernetes cluster, you cannot view static pods with kubectl; use the docker ps command instead.

- When the node is part of a Kubernetes cluster, the static pods also appear in kubectl, but only as read-only pods.

The static pods have the node name appended at the end of the name.
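To make this concrete, here is a minimal sketch of the two pieces involved: the kubelet configuration entry and a manifest dropped into the watched directory. These are two separate files, shown together only for brevity. The file name static-web.yaml and the pod contents are hypothetical.

```yaml
# File 1: excerpt of the kubelet configuration (for example /var/lib/kubelet/config.yaml)
apiVersion: kubelet.config.k8s.io/v1beta1
kind: KubeletConfiguration
staticPodPath: /etc/kubernetes/manifests   # directory the kubelet watches
---
# File 2: a static pod manifest saved in that directory,
# for example /etc/kubernetes/manifests/static-web.yaml (hypothetical name)
apiVersion: v1
kind: Pod
metadata:
  name: static-web
spec:
  containers:
  - name: web
    image: nginx
```

On a node named node01, this pod would be listed as static-web-node01.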

Kubernetes Multiple Schedulers

- The scheduler configuration file can be represented as below. The leaderElect option enables leader election, which decides which scheduler instance is the active leader when several copies are running.

apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
- schedulerName: default-scheduler
leaderElection:
  leaderElect: true
  resourceNamespace: kube-system
  resourceName: lock-object-mysched

When the scheduler runs from the kube-scheduler binary as a service, you can deploy an additional scheduler by running the same binary as another service with its own configuration file, as follows:

- Create the my-scheduler2 service file and set its configuration as follows:

ExecStart = /usr/local/bin/kube-scheduler --config=/etc/kubernetes/config/my-scheduler-2-config.yaml

- Create the my-scheduler3 service file and set its configuration as follows:

ExecStart = /usr/local/bin/kube-scheduler --config=/etc/kubernetes/config/my-scheduler-3-config.yaml

- To deploy the scheduler as a pod, create a manifest file like the one below and deploy it.

apiVersion: v1
kind: Pod
metadata:
  name: my-custom-scheduler
  namespace: kube-system
spec:
  containers:
  - name: app
    image: images.my-company.example/app:v4
    command:
    - kube-scheduler
    - --address=127.0.0.1
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --config=/etc/kubernetes/my-scheduler-config.yaml

- To check the logs of the scheduler, run the command below.

kubectl logs my-custom-scheduler --namespace=kube-system

- If you need to mount a ConfigMap as a volume in the scheduler pod, create the ConfigMap before creating the pod. To create the ConfigMap that holds the scheduler configuration, run the command below.

kubectl create configmap my-scheduler-config --from-file=/root/my-scheduler-config.yaml -n kube-system

- To get the image of scheduler pod run the below command.

kubectl describe pod kube-scheduler-controlplane -n kube-system | grep Image
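Putting the last few steps together, a scheduler pod that reads its configuration from the my-scheduler-config ConfigMap might look like the sketch below. The mount path and volume name are assumptions, and the image is the placeholder used earlier; in practice you would substitute the image reported by the describe command above. A real deployment also needs a service account with suitable permissions, which is omitted here.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-custom-scheduler
  namespace: kube-system
spec:
  containers:
  - name: kube-scheduler
    image: images.my-company.example/app:v4    # placeholder; use the real scheduler image
    command:
    - kube-scheduler
    # the ConfigMap key is the file name passed to --from-file
    - --config=/etc/kubernetes/my-scheduler/my-scheduler-config.yaml
    volumeMounts:
    - name: config-volume                      # assumed volume name
      mountPath: /etc/kubernetes/my-scheduler  # assumed mount path
  volumes:
  - name: config-volume
    configMap:
      name: my-scheduler-config                # ConfigMap created with kubectl create configmap
```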

Kubernetes Scheduler Profiles

Scheduler profiles allow a single scheduler to act as multiple schedulers, all defined in the same configuration file. The configuration file below defines two profiles named my-scheduler1 and my-scheduler2.

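apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
- schedulerName: my-scheduler1
  plugins:
    score:
      disabled:
      - name: # name of a score plugin to disable
      enabled:
      - name: # name of a score plugin to enable
- schedulerName: my-scheduler2
  plugins:
    score:
      disabled:
      - name: # name of a score plugin to disable
      enabled:
      - name: # name of a score plugin to enable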

Plugins carry out each stage of scheduling a pod, for example:

- Scheduling queue: Pods are ordered by priority, which comes from their PriorityClass.

- Filtering: NodeResourcesFit, NodeName (checks the nodeName property), and NodeUnschedulable (filters out nodes whose unschedulable property is set to true).

- Scoring and binding.

Plugins are attached to the scheduling stages through extension points. Examples of extension points are queueSort, preFilter, filter, postFilter, preScore, score, reserve, permit, preBind, bind, and postBind.
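As a small illustration of plugins and extension points in a profile, the sketch below keeps the default plugins for one profile and disables a single plugin at the score extension point for the other. The choice of ImageLocality is only an example; any score plugin name could appear there.

```yaml
apiVersion: kubescheduler.config.k8s.io/v1
kind: KubeSchedulerConfiguration
profiles:
- schedulerName: my-scheduler1     # uses the default set of plugins
- schedulerName: my-scheduler2
  plugins:
    score:                         # extension point being customized
      disabled:
      - name: ImageLocality        # example: do not score nodes by images already present
```

A pod selects one of these profiles by setting schedulerName in its spec.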

- To give a pod a priority, add the priorityClassName property to its spec.

apiVersion: v1
kind: Pod
metadata:
  name: nginx
spec:
  priorityClassName: high-priority
  schedulerName: my-scheduler
  containers:
  - image: nginx
    name: nginx

- To define the high priority, you must first create the priority class as follows.

apiVersion: scheduling.k8s.io/v1
kind: PriorityClass
metadata:
  name: high-priority
value: 1000000
globalDefault: false

Monitoring Kubernetes Cluster

Earlier, Heapster was used to monitor a Kubernetes cluster, but it is now deprecated. Its replacement, Metrics Server, collects resource metrics for the nodes and pods of the cluster. Metrics Server keeps its data in memory only and does not store it on disk.

Each worker node runs the kubelet, which contains a component known as cAdvisor (Container Advisor). cAdvisor gathers the performance metrics of the pods and exposes them through the kubelet API, from which Metrics Server retrieves them.

- You can download the Metrics Server deployment files by cloning the repository below.

git clone https://github.com/kubernetes-incubator/metrics-server.git

- Now run the command below from the cloned directory. The command will deploy multiple pods.

kubectl create -f deploy/1.8+

- Next, to check that Metrics Server is collecting metrics, run the commands below. They show the CPU and memory consumption of each node and pod.

kubectl top node

kubectl top pod

Kubernetes Logging

kubectl create -f event.yaml # To deploy the Kubernetes pod

kubectl logs -f events-pod # To check the logs of the Kubernetes pod

If the pod has multiple containers, name the container whose logs you want, or use --all-containers to fetch the logs of all the containers.

kubectl logs -f events-pod -c <container-name>

kubectl logs -f events-pod --all-containers=true

Kubernetes Rollouts

- When you first create a deployment, it triggers a rollout, which creates revision 1.

- The next update to the deployment triggers another rollout, which creates revision 2.

- To check the status and history of a deployment's rollouts, run the commands below.

kubectl rollout status deployment/myapp-deployment

kubectl rollout history deployment/myapp-deployment

Kubernetes Strategy

Kubernetes supports two deployment strategies:

- Recreate: It redeploys the pods in one go, which means it removes all the old pods and then creates all the new ones. With this strategy, the Events section of the command below shows the old replica set being scaled down to 0 first, and the new replica set then being scaled up to the number of replicas defined.

kubectl describe deployment <deployment-name>

- RollingUpdate (default): It replaces the pods a few at a time. With this strategy, the Events section of the command below shows the new replica set being scaled up step by step (1, 2, 3, 4, 5) while the old one is scaled down, so the application is never scaled to 0.

kubectl describe deployment <deployment-name>

You can roll back from the new version to the previous version by running the command below.

kubectl rollout undo deployment/myapp-deployment
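The strategy is chosen in the deployment's spec. The sketch below sets the rolling update strategy explicitly; the labels, replica count, and the maxUnavailable and maxSurge values are assumptions for illustration.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: myapp-deployment
spec:
  replicas: 5                # assumed replica count
  strategy:
    type: RollingUpdate      # use "Recreate" here (and drop rollingUpdate) for the other strategy
    rollingUpdate:
      maxUnavailable: 1      # at most one pod below the desired count during the update
      maxSurge: 1            # at most one extra pod above the desired count
  selector:
    matchLabels:
      app: myapp             # assumed label
  template:
    metadata:
      labels:
        app: myapp
    spec:
      containers:
      - name: nginx
        image: nginx:1.9.1
```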

Create Kubernetes Deployments, Get Deployments, and Kubernetes Rollout Status

kubectl create -f deploy.yaml # To create the deployment

kubectl get deployments # To list the deployments

kubectl apply -f deploy.yaml # To update the deployment

kubectl set image deployment/myapp-deployment nginx=nginx:1.9.1 # To update the image; this does not change the YAML file

Example: kubectl set image deploy frontend <container-name>=<image-name>

kubectl rollout status deployment/myapp-deployment # To check the rollout status of the deployment

kubectl rollout history deployment/myapp-deployment # To check the rollout history of the deployment

kubectl rollout undo deployment/myapp-deployment # To roll the deployment back to the previous revision

Kubernetes Commands and Arguments

By default, Docker does not attach a terminal to a container, so when you run docker run ubuntu, the container finds no terminal and exits. To keep it running, append a command at the end, such as docker run ubuntu sleep 5.

Commands and arguments determine what a container runs when it starts, both in Docker and in pods on a Kubernetes cluster. Let's look at an example with a Dockerfile and a pod definition file.

- The Dockerfile is shown below.

FROM ubuntu
ENTRYPOINT ["sleep"]
CMD ["5"]

- The pod manifest file is shown below.

apiVersion: v1
kind: Pod
metadata:
  name: ubuntu-pod
spec:
  containers:
  - name: ubuntu-container
    image: ubuntu-image
    command: ["sleep"] # Equivalent to ENTRYPOINT in the Dockerfile
    args: ["10"] # Equivalent to CMD in the Dockerfile

- Create a pod with the ubuntu image that runs a container sleeping for 5000 seconds by modifying the file ubuntu-sleeper-2.yaml. The three manifests below are equivalent ways to write it.

apiVersion: v1
kind: Pod
metadata:
  name: ubuntu-sleeper-2
spec:
  containers:
  - name: ubuntu
    image: ubuntu
    command: ["sleep"]
    args: ["5000"]

apiVersion: v1
kind: Pod
metadata:
  name: ubuntu-sleeper-2
spec:
  containers:
  - name: ubuntu
    image: ubuntu
    command: ["sleep", "5000"]

apiVersion: v1
kind: Pod
metadata:
  name: ubuntu-sleeper-2
spec:
  containers:
  - name: ubuntu
    image: ubuntu
    command:
    - "sleep"
    - "5000"

- In a pod, command overrides the image's ENTRYPOINT, and args overrides the image's CMD.
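As a final sketch, a pod can override only the arguments and leave the entrypoint untouched. This assumes an image built from the Dockerfile shown earlier (ENTRYPOINT sleep, CMD 5); the image name ubuntu-sleeper and the pod name are hypothetical.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ubuntu-sleeper-args   # hypothetical pod name
spec:
  containers:
  - name: ubuntu-sleeper
    image: ubuntu-sleeper     # hypothetical image built from the Dockerfile above
    args: ["10"]              # replaces CMD ["5"]; the container runs: sleep 10
```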